Ontology and Concept Services

SKOS vs. OWL

OWL (the Web Ontology Language) is a W3C-standard declarative language for describing formal ontologies on the web in terms of classes, properties, and the relationships among them. OWL has been used to build machine-readable models that support reasoning in a particular problem domain, by representing the semantics of the data more formally than alternatives such as XML and JSON. The language has proven powerful, but it is esoteric and difficult to apply in practice. The main practical difficulty has been its “open world” assumption. With most schemas, a developer can assume the schema is the complete representation of a set of related classes. OWL instead lets developers compose schemas from multiple sources online, so you never really know whether you have all the information about the objects it describes. Complex syntax is a second issue, only partially addressed by the still-immature software libraries and tools for working with OWL.

SKOS is a W3C standard for defining online knowledge organization systems (KOS) such as thesauri, taxonomies, classification schemes, and subject heading lists. Using SKOS, concepts can be identified using URIs, labeled with lexical strings in one or more natural languages, assigned notations (lexical codes), documented with various types of notes, linked to other concepts and organized into informal hierarchies and association networks, aggregated into concept schemes, grouped into labeled and/or ordered collections, and mapped to concepts in other schemes. SKOS structures are machine-readable and solve many practical problems of adding semantic information to data managed by software applications, while being conceptually simpler to work with, and to develop software against, than general OWL ontologies. SKOS is compatible with RDF and OWL, but it gives up the full power and expressivity of OWL by defining a simple schema for KOS; see the SKOS Primer, particularly Chapters 1-2, for more information.

Here, we propose implementing a Synapse Ontology using SKOS. Using a W3C-supported standard should make it easier to map information in Synapse to systems that use other ontologies (e.g. EFO) in the future. At the same time, SKOS gives more guidance on how to structure a practical ontology for the Synapse system that addresses our immediate use cases around standardizing annotations and searching for entities in the system.

Use Cases

- Constrain the set of values allowable for a particular property on a particular entity type to a set of values defined by the Synapse Ontology. For example, the disease property must come from the set of terms that are narrower concepts than the “Disease” concept defined by the Synapse ontology. Note: one way we currently do this is by defining Enumerations in our object JSON schema, which result in the generation of Java objects containing an Enum of valid values. This is sufficient when the list of terms is relatively small and static. The ontology path is designed to support cases with tens of thousands of allowed terms (e.g. any valid gene symbol), and to allow at least additive changes to the ontology without a Synapse build-deploy cycle. (Breaking changes to the Synapse ontology could also require a data migration step to make old data match the new ontology.)

- Constrain the set of values allowable for a particular clinical variable. Currently these are columns of a phenotype layer; we are in the process of modeling them more explicitly as a Samples object within a dataset. In either case, we want to be able to define rules such as “the genes column must contain a gene as identified by the Gene Ontology”.

- Use the ontology to help users find the entities or samples they are interested in. This could take the form of:

  - type-ahead resolution (or other UI mechanism to limit input to a large set of strings) against all or part of the Synapse ontology

  - facets linked to a portion of the Synapse ontology

  - use of synonyms and broader / narrower terms in the Synapse ontology to pre-process a search of free text and return more complete / relevant results.

- Long term only: Allow users annotating data to feed back proposed modifications to the Synapse ontology so that it improves over time.
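To make the synonym-based search use case more concrete, here is a minimal Java sketch of query expansion: a free-text term that matches a concept's preferred label or a synonym (skos:altLabel) is expanded to that concept's labels plus the labels of every narrower concept beneath it. `ConceptNode` and `QueryExpansion` are hypothetical names, the concept data is assumed to be already loaded in memory, and whether to expand through all narrower levels or only one is a design choice this sketch does not settle.

```java
import java.util.*;

// Hypothetical in-memory view of one SKOS concept, for illustration only.
class ConceptNode {
    final String uri;
    final String prefLabel;            // skos:prefLabel
    final List<String> altLabels;      // skos:altLabel (synonyms)
    final List<String> narrowerUris;   // skos:narrower (direct children)

    ConceptNode(String uri, String prefLabel, List<String> altLabels, List<String> narrowerUris) {
        this.uri = uri;
        this.prefLabel = prefLabel;
        this.altLabels = altLabels;
        this.narrowerUris = narrowerUris;
    }
}

// Hypothetical query expander; not an existing Synapse class.
class QueryExpansion {
    private final Map<String, ConceptNode> byUri = new HashMap<>();
    // Lower-cased preferred or alternative label -> concept URI.
    private final Map<String, String> uriByLabel = new HashMap<>();

    void add(ConceptNode c) {
        byUri.put(c.uri, c);
        uriByLabel.put(c.prefLabel.toLowerCase(Locale.ROOT), c.uri);
        for (String alt : c.altLabels) {
            uriByLabel.put(alt.toLowerCase(Locale.ROOT), c.uri);
        }
    }

    /**
     * Returns the original term plus, if it matches a known label, the labels and
     * synonyms of that concept and of all concepts narrower than it (any depth).
     */
    Set<String> expand(String term) {
        Set<String> terms = new LinkedHashSet<>();
        terms.add(term);
        String uri = uriByLabel.get(term.toLowerCase(Locale.ROOT));
        if (uri == null) {
            return terms; // unknown term: search for it unchanged
        }
        Deque<String> pending = new ArrayDeque<>();
        Set<String> visited = new HashSet<>();
        pending.push(uri);
        while (!pending.isEmpty()) {
            ConceptNode c = byUri.get(pending.pop());
            if (c == null || !visited.add(c.uri)) {
                continue; // missing concept or already expanded
            }
            terms.add(c.prefLabel);
            terms.addAll(c.altLabels);
            for (String child : c.narrowerUris) {
                pending.push(child);
            }
        }
        return terms;
    }
}
```

The expanded set would then be OR-ed together in the free-text search, so a query for a synonym also finds entities annotated with the preferred term or any more specific one.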

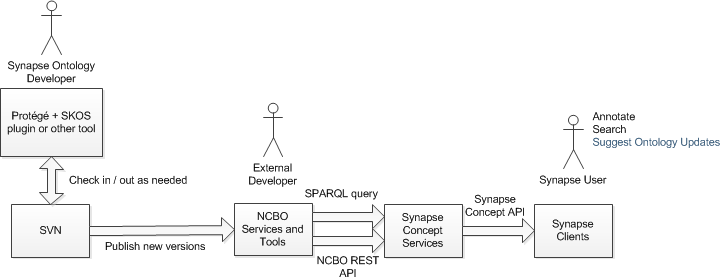

Summary of Design

- Ontology developers can use any tool that reads and writes SKOS to update the ontology. For example, Protégé has a SKOS plug-in, and Google Refine can translate between a spreadsheet format and SKOS. We propose that the Synapse ontology be edited by a small number of in-house experts, who will check it into SVN as SKOS. This gives us versioning, merging, and diffs for the ontology, and sets us up for interoperability with other systems.

- We will publish each new version of the Synapse ontology to NCBO whenever we want to upgrade it and make it available to external developers and to the Synapse system. This makes the ontology available to both our own and external developers through the NCBO web services, including a SPARQL endpoint (SPARQL is a general-purpose RDF graph query language). Our Google Refine project, for example, already calls the public NCBO services to reconcile terms against any NCBO ontology.

- We will add a new set of Concept Services to Synapse so that Synapse clients can easily use the Synapse ontology. This will also insulate real-time users of these calls from depending on an external web service, reducing the risk of performance or availability problems. The service will not persist the ontology long term; it will most likely serve requests from an in-memory cache of concepts built by loading the Synapse ontology through the NCBO services.

- Synapse clients call the Synapse Concept Services for information about concepts.
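For developers who query NCBO directly, the concept hierarchy can be walked with SPARQL. The sketch below only builds the text of a SPARQL 1.1 query that lists every concept below a parent concept, using the `skos:broader+` property path. It assumes the published ontology asserts `skos:broader` triples (SKOS defines `skos:narrower` as its inverse, but an endpoint may not infer one from the other), and it deliberately leaves out the endpoint URL and HTTP call, which this page does not specify. `SkosSparql` and `narrowerConceptsQuery` are hypothetical names.

```java
import java.util.*;

// Hypothetical helper: builds SPARQL 1.1 query text only; it does not contact NCBO.
class SkosSparql {
    /**
     * Query for every concept below parentUri (one or more skos:broader steps)
     * with its preferred label in the given language tag, e.g. "en".
     */
    static String narrowerConceptsQuery(String parentUri, String lang) {
        // Reject characters that are not allowed inside a SPARQL <IRI> to avoid query injection.
        if (parentUri == null || parentUri.isEmpty() || parentUri.matches(".*[\\s<>\"{}|^`\\\\].*")) {
            throw new IllegalArgumentException("parentUri must be a plain IRI");
        }
        if (lang == null || !lang.matches("[A-Za-z]{1,8}(-[A-Za-z0-9]{1,8})*")) {
            throw new IllegalArgumentException("lang must be a language tag such as \"en\"");
        }
        return String.join("\n",
                "PREFIX skos: <http://www.w3.org/2004/02/skos/core#>",
                "SELECT DISTINCT ?concept ?label WHERE {",
                "  ?concept skos:broader+ <" + parentUri + "> .",
                "  ?concept skos:prefLabel ?label .",
                // Keep untagged labels as well as labels matching the requested language.
                "  FILTER(lang(?label) = \"\" || langMatches(lang(?label), \"" + lang + "\"))",
                "}",
                "ORDER BY ?label");
    }
}
```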

Ontology Development

Open task: Convert Synapse ontology to make it SKOS compliant (Mike)

Open task: Evaluate the Protégé SKOS plug-in for maintaining and developing the Synapse ontology (Mike + Brig)

Open task: Evaluate Google Refine as SKOS editor (Mike)

Open task: Search for any other tools that might be easier to use (Mike)

Publishing Synapse Ontology to NCBO

Publishing can continue to work as it does today.

Concept Services API

For simplicity, I’ll start by defining these as Java methods and classes, and will convert them to REST / JSON once we have settled on the right set of services.

Finding Concepts

ConceptSummary[] getAllConcepts(String parentConceptURI, String startFilter, String language)

The intention of this service is to allow clients to display lists of concepts in the ontology to users. We assume users only ever see the preferred label of a concept, while the system identifies concepts by URI. The return value is therefore an array of ConceptSummary objects:

Class ConceptSummary

- String URI

- String preferredLabel (skos:prefLabel)

Parameters

parentConceptURI – Restricts the results to concepts in the Synapse ontology that are narrower, at any depth (skos:narrowerTransitive), than the concept identified by parentConceptURI. If parentConceptURI is null, return all concepts in the ontology.

startFilter – Restricts the results to concepts whose preferred label (should synonyms be included too?) starts with startFilter. If null, no filter is applied.

language – the language to return results in. If null, assume English. (Support not needed near term.)
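A minimal sketch of how `getAllConcepts` could be answered from an in-memory index. It assumes each concept has one preferred label and at most one broader concept, treats `startFilter` as a case-insensitive prefix on the preferred label only (synonyms excluded, since that is still an open question), and ignores `language`. `ConceptIndex` and `addConcept` are hypothetical; only the `getAllConcepts` signature and the two ConceptSummary fields come from this page, written here with Java-style field names.

```java
import java.util.*;

// Summary returned to clients (Java-style names for the URI / preferred label fields).
class ConceptSummary {
    final String uri;
    final String preferredLabel; // skos:prefLabel

    ConceptSummary(String uri, String preferredLabel) {
        this.uri = uri;
        this.preferredLabel = preferredLabel;
    }
}

// Hypothetical in-memory index behind getAllConcepts; English only for now.
class ConceptIndex {
    private final Map<String, String> prefLabelByUri = new LinkedHashMap<>();
    private final Map<String, List<String>> narrowerByUri = new HashMap<>();

    // Hypothetical loader hook: registers a concept and its (single) broader concept.
    void addConcept(String uri, String prefLabel, String broaderUri) {
        prefLabelByUri.put(uri, prefLabel);
        if (broaderUri != null) {
            narrowerByUri.computeIfAbsent(broaderUri, k -> new ArrayList<>()).add(uri);
        }
    }

    ConceptSummary[] getAllConcepts(String parentConceptURI, String startFilter, String language) {
        // language is accepted but ignored: every label is treated as English.
        Collection<String> candidates = (parentConceptURI == null)
                ? prefLabelByUri.keySet()
                : descendantsOf(parentConceptURI);
        String prefix = (startFilter == null) ? null : startFilter.toLowerCase(Locale.ROOT);
        List<ConceptSummary> result = new ArrayList<>();
        for (String uri : candidates) {
            String label = prefLabelByUri.get(uri);
            if (prefix == null || label.toLowerCase(Locale.ROOT).startsWith(prefix)) {
                result.add(new ConceptSummary(uri, label));
            }
        }
        result.sort(Comparator.comparing((ConceptSummary s) -> s.preferredLabel));
        return result.toArray(new ConceptSummary[0]);
    }

    // All concepts below parentUri at any depth (skos:narrowerTransitive), excluding parentUri.
    private Set<String> descendantsOf(String parentUri) {
        Set<String> seen = new LinkedHashSet<>();
        Deque<String> pending = new ArrayDeque<>(narrowerByUri.getOrDefault(parentUri, Collections.emptyList()));
        while (!pending.isEmpty()) {
            String uri = pending.pop();
            if (seen.add(uri)) {
                pending.addAll(narrowerByUri.getOrDefault(uri, Collections.emptyList()));
            }
        }
        return seen;
    }
}
```

Sorting by preferred label gives clients a stable, display-ready order for type-ahead lists.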

Question: would this need to be paged? Or is returning 10K string pairs in JSON OK? What is the biggest list of ConceptSummaries the client would ever need?
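If paging turns out to be necessary, a common option is offset/limit paging over a list kept in a stable order (for example, sorted by preferred label), returning the total count so a client knows how many results remain. The sketch assumes hypothetical `offset` and `limit` parameters that are not part of the signature above; since the concept list only changes when the cache is rebuilt, offsets should stay consistent between calls in the meantime. `Page` and `Paging` are hypothetical names.

```java
import java.util.*;

// Hypothetical page of results returned by a paged variant of getAllConcepts.
class Page<T> {
    final List<T> items;
    final int totalCount; // size of the full, unpaged result

    Page(List<T> items, int totalCount) {
        this.items = items;
        this.totalCount = totalCount;
    }
}

class Paging {
    /** Returns items [offset, offset + limit) of a list that is already in a stable order. */
    static <T> Page<T> page(List<T> sorted, int offset, int limit) {
        if (offset < 0 || limit <= 0) {
            throw new IllegalArgumentException("offset >= 0 and limit > 0 required");
        }
        int from = Math.min(offset, sorted.size());
        // Use long arithmetic so a very large limit cannot overflow.
        int to = (int) Math.min((long) from + limit, sorted.size());
        return new Page<>(new ArrayList<>(sorted.subList(from, to)), sorted.size());
    }
}
```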

Question: does the client need to display related concepts in a tree view? If so, we are not returning enough information to do it. We could add an integer searchDepth parameter to this service, so that only direct children are retrieved when a node in the tree is expanded. We might not need arbitrary depth, in which case an alternative to an int searchDepth would be a Boolean flag that returns either all descendants or only the direct children of a concept.

Question: does the client ever need the set of top concepts? Or will every constrained property in our schema name a parent concept that the client can use? If we need top concepts, calling the service with searchDepth=1 and parentConceptURI=null would return them.
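The `searchDepth` idea in the two questions above can be sketched as a breadth-first walk that stops after a fixed number of levels, with a null parent treated as a virtual root whose children are the top concepts. Here a top concept is assumed to be any concept with no broader concept; SKOS also offers `skos:hasTopConcept` on the concept scheme for stating this explicitly. `ConceptTree`, `link` and `conceptsBelow` are hypothetical names, and `searchDepth` is only the proposal above.

```java
import java.util.*;

// Hypothetical tree built from skos:broader / skos:narrower links, for illustration only.
class ConceptTree {
    private final Map<String, List<String>> children = new HashMap<>();
    private final Set<String> all = new LinkedHashSet<>();
    private final Set<String> hasParent = new HashSet<>();

    void link(String parentUri, String childUri) {
        children.computeIfAbsent(parentUri, k -> new ArrayList<>()).add(childUri);
        all.add(parentUri);
        all.add(childUri);
        hasParent.add(childUri);
    }

    /** Top concepts: known concepts that have no broader concept. */
    List<String> topConcepts() {
        List<String> tops = new ArrayList<>();
        for (String uri : all) {
            if (!hasParent.contains(uri)) tops.add(uri);
        }
        return tops;
    }

    /**
     * Concepts below parentUri, down to searchDepth levels (1 = direct children only).
     * A null parentUri starts from a virtual root whose children are the top concepts.
     */
    List<String> conceptsBelow(String parentUri, int searchDepth) {
        List<String> result = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        List<String> level = (parentUri == null)
                ? topConcepts()
                : children.getOrDefault(parentUri, Collections.emptyList());
        for (int depth = 1; depth <= searchDepth && !level.isEmpty(); depth++) {
            List<String> next = new ArrayList<>();
            for (String uri : level) {
                if (seen.add(uri)) {
                    result.add(uri);
                    next.addAll(children.getOrDefault(uri, Collections.emptyList()));
                }
            }
            level = next;
        }
        return result;
    }
}
```

With this shape, a tree view asks for searchDepth=1 each time a node is expanded, and the Boolean-flag alternative corresponds to choosing between 1 and an unbounded depth.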

Getting more information about a concept

Concept getConcept(String conceptURI, String language)

Given a conceptURI, return an object containing all the information defined for that concept by the skos:Concept class.

Class Concept

- String URI //Globally Unique ID for this concept

- String preferredLabel (skos:prefLabel)

- String[] synonyms (skos:altLabel) //Terms that mean exactly the same thing as this concept

- String[] hiddenLabel (skos:hiddenLabel) //Probably not used

- String[] changeNotes (skos:changeNote) //Probably not used

- String[] definitions (skos:definition) //Text shown when the user mouses over the term

- String[] editorialNotes (skos:editorialNote) //Probably not used

- String[] example (skos:example) //Probably not used

- String[] historyNote (skos:historyNote) //Probably not used

- String[] scopeNote (skos:scopeNote) //Probably not used

- String[] ancestor (skos:broaderTransitive) //URIs of all ancestors, ordered from the direct parent up to the root

- String parent (skos:broader) //URI of direct parent; SKOS allows several broader concepts, so a single value assumes a strict hierarchy

- String[] children (skos:narrower) //URIs of all direct children

- String[] descendants (skos:narrowerTransitive) //URIs of all descendant concepts

- String[] related (skos:related) //URIs of associatively related concepts; not needed in the first version of the services
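The transitive fields need not be stored separately: with a single `parent` per concept, the ancestor list can be derived by following `skos:broader` links upward, and the descendant list by a breadth-first walk over `skos:narrower`. A minimal sketch, assuming a strict hierarchy; the `Hierarchy` class and its methods are hypothetical.

```java
import java.util.*;

// Hypothetical helper; assumes each concept has at most one skos:broader concept.
class Hierarchy {
    private final Map<String, String> broaderOf = new HashMap<>();
    private final Map<String, List<String>> narrowerOf = new HashMap<>();

    void setBroader(String childUri, String parentUri) {
        broaderOf.put(childUri, parentUri);
        narrowerOf.computeIfAbsent(parentUri, k -> new ArrayList<>()).add(childUri);
    }

    /** skos:broaderTransitive, ordered from the direct parent up to the root. */
    List<String> ancestors(String uri) {
        List<String> result = new ArrayList<>();
        Set<String> seen = new HashSet<>(); // guards against accidental cycles in the data
        String current = broaderOf.get(uri);
        while (current != null && seen.add(current)) {
            result.add(current);
            current = broaderOf.get(current);
        }
        return result;
    }

    /** skos:narrowerTransitive, in breadth-first order. */
    List<String> descendants(String uri) {
        List<String> result = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        Deque<String> queue = new ArrayDeque<>(narrowerOf.getOrDefault(uri, Collections.emptyList()));
        while (!queue.isEmpty()) {
            String next = queue.poll();
            if (seen.add(next)) {
                result.add(next);
                queue.addAll(narrowerOf.getOrDefault(next, Collections.emptyList()));
            }
        }
        return result;
    }
}
```

Computing these lists once, when the cache is built, keeps each getConcept call a simple lookup.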

Parameters

conceptURI – the URI that uniquely and globally identifies this concept. If null, return an error.

language – the language encoding to return the concept in. If null assume English. Not needed in initial implementation.
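A sketch of how the two parameters of `getConcept` might be handled, assuming a null or unknown `conceptURI` is reported with an exception and that a missing translation falls back to English. To stay short it resolves only the preferred label rather than a full Concept; `LocalizedConcept`, `ConceptLookup` and `preferredLabel` are hypothetical names.

```java
import java.util.*;

// Hypothetical cut-down concept holding preferred labels per language tag.
class LocalizedConcept {
    final String uri;
    final Map<String, String> prefLabelByLang; // language tag -> skos:prefLabel

    LocalizedConcept(String uri, Map<String, String> prefLabelByLang) {
        this.uri = uri;
        this.prefLabelByLang = prefLabelByLang;
    }
}

// Hypothetical lookup illustrating the conceptURI / language parameter rules.
class ConceptLookup {
    private static final String DEFAULT_LANGUAGE = "en";
    private final Map<String, LocalizedConcept> byUri = new HashMap<>();

    void put(LocalizedConcept c) {
        byUri.put(c.uri, c);
    }

    /** Preferred label of conceptURI in the requested language, falling back to English. */
    String preferredLabel(String conceptURI, String language) {
        if (conceptURI == null) {
            throw new IllegalArgumentException("conceptURI must not be null");
        }
        LocalizedConcept c = byUri.get(conceptURI);
        if (c == null) {
            throw new NoSuchElementException("Unknown concept: " + conceptURI);
        }
        String lang = (language == null) ? DEFAULT_LANGUAGE : language;
        String label = c.prefLabelByLang.get(lang);
        return (label != null) ? label : c.prefLabelByLang.get(DEFAULT_LANGUAGE);
    }
}
```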

Integration with Synapse Entity JSON Schema

We propose that a top-level concept URI can be included in the JSON entity schema as an alternative to defining an enumeration in place. This URI can then be passed to the getAllConcepts service to return the set of concepts permitted for the property. This keeps long enumerations out of the JSON schema and our repository services, and lets us deploy ontology changes without changing running Synapse code.
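As a rough sketch of this integration, the validator below assumes the schema maps each constrained property name to its top-level concept URI, that annotations store concept URIs rather than labels, and that a function standing in for `getAllConcepts(parentConceptURI, null, null)` returns the allowed URIs. `ConceptConstraintValidator` and its constructor arguments are hypothetical, and the schema keyword that would hold the URI is not decided here.

```java
import java.util.*;
import java.util.function.Function;

// Hypothetical validator: a schema property names a top-level concept URI instead of an enum.
class ConceptConstraintValidator {
    // Stand-in for getAllConcepts(parentConceptURI, null, null), reduced to the allowed URIs.
    private final Function<String, Set<String>> allowedUnder;
    // Property name -> top-level concept URI, as it might be read from the entity's JSON schema.
    private final Map<String, String> constrainedProperties;

    ConceptConstraintValidator(Function<String, Set<String>> allowedUnder,
                               Map<String, String> constrainedProperties) {
        this.allowedUnder = allowedUnder;
        this.constrainedProperties = constrainedProperties;
    }

    /** Returns error messages; an empty list means the annotations are valid. */
    List<String> validate(Map<String, String> annotations) {
        List<String> errors = new ArrayList<>();
        for (Map.Entry<String, String> e : annotations.entrySet()) {
            String parentUri = constrainedProperties.get(e.getKey());
            if (parentUri == null) {
                continue; // property is not constrained by the ontology
            }
            if (!allowedUnder.apply(parentUri).contains(e.getValue())) {
                errors.add(e.getKey() + ": " + e.getValue() + " is not a concept under " + parentUri);
            }
        }
        return errors;
    }
}
```

Because the allowed set is fetched from the Concept Services at validation time, adding concepts to the ontology widens what is accepted without a code change.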

We expect the predominant change to the Synapse ontology to be the addition of new concepts, or refinement of synonyms, definitions, or other information about concepts in an ontology. In this case no change to the data stored by the Synapse repository services is needed. For cases where concepts need to be deleted we propose the following lifecycle:

- We define a way to mark concepts in the ontology as deprecated. Synapse clients would still present information about a deprecated concept to users, suggest alternatives, and block the creation of new annotations that mention it.

- Run a data migration process in which the deprecated concepts are replaced by newer alternatives. Depending on the nature of change to the ontology this may be either a manual or automated process.

- Drop the deprecated concepts completely from the ontology.

Because we expect this case to be rare, we may be able to defer support for it until a specific need arises.
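If deprecation support is eventually needed, the rules in the lifecycle above could look roughly like this sketch. SKOS itself has no deprecation property, so the sketch simply assumes some flag plus an optional replacement concept is available (OWL 2's `owl:deprecated` annotation property is one candidate encoding). `DeprecationCheck` and its methods are hypothetical.

```java
import java.util.*;

// Hypothetical deprecation rules; how "deprecated" is encoded in the ontology is undecided.
class DeprecationCheck {
    private final Set<String> deprecated = new HashSet<>();
    private final Map<String, String> replacementFor = new HashMap<>();

    void deprecate(String uri, String replacementUri) {
        deprecated.add(uri);
        if (replacementUri != null) {
            replacementFor.put(uri, replacementUri);
        }
    }

    /** Existing annotations may keep a deprecated concept; new annotations may not use it. */
    void checkNewAnnotation(String conceptUri) {
        if (deprecated.contains(conceptUri)) {
            String hint = replacementFor.containsKey(conceptUri)
                    ? " Consider " + replacementFor.get(conceptUri) + " instead."
                    : "";
            throw new IllegalArgumentException(conceptUri + " is deprecated." + hint);
        }
    }

    /** Migration step: the replacement concept, if one was recorded. */
    Optional<String> migrate(String conceptUri) {
        return Optional.ofNullable(replacementFor.get(conceptUri));
    }
}
```

Concepts with no recorded replacement return an empty Optional, which marks them for the manual branch of the migration.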

Implementation Notes

We assume the service simply answers the queries defined above very quickly from an in-memory cache and holds no persistent state. Options to discuss:

- Amazon ElastiCache seems built for this type of service, although it could be overkill, as I’m not sure we need a distributed cache. Building this on ElastiCache would also give us experience with the technology.

- Adding the service to the Repository Services might be the quick-and-dirty, fastest way to get something up and running in the short term.

In either case, we need a way to tell the service to rebuild its cache. This is an administrative call made after we update NCBO with a new version of the ontology. The basic idea is that when the service receives a rebuild request, it builds a new cache in memory and then replaces the old cache with the new one, so we can switch to a new ontology with no downtime. This implies that the cache can use at most half of the node's memory. Alternatively, we could let the service go down briefly during a rebuild to avoid needing double the memory.
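The no-downtime rebuild described above is essentially an atomic reference swap. A minimal sketch, assuming the cache is a map from concept URI to preferred label and that the loader (for example, a call to the NCBO services) returns a freshly built map that nothing else modifies; `ConceptCache` and `rebuild` are hypothetical names.

```java
import java.util.*;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

// Hypothetical cache holder: readers see either the old or the new snapshot, never a mix.
class ConceptCache {
    private final AtomicReference<Map<String, String>> snapshot =
            new AtomicReference<Map<String, String>>(Collections.<String, String>emptyMap());

    /** Read path: one atomic read, then a lookup in an unmodifiable map. */
    String preferredLabel(String uri) {
        return snapshot.get().get(uri);
    }

    /**
     * Admin rebuild call: builds a complete new snapshot while the old one keeps serving
     * requests, then swaps it in atomically. Both maps are in memory until the swap.
     */
    void rebuild(Supplier<Map<String, String>> loader) {
        Map<String, String> fresh = Collections.unmodifiableMap(loader.get());
        snapshot.set(fresh);
    }
}
```

Requests that started before the swap finish against the old map, which becomes garbage once nothing references it; until then both maps are resident, which is where the half-the-memory limit comes from.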