AWS Security Design

In preparation for putting Personally Identifiable Information (PII), such as genetic sequence data, on AWS, let's research our options.

When we are done with our research, let's review our approach with Max Ramsay and Allan MacInnis of Amazon. (This meeting occurred on June 1, 2011.)

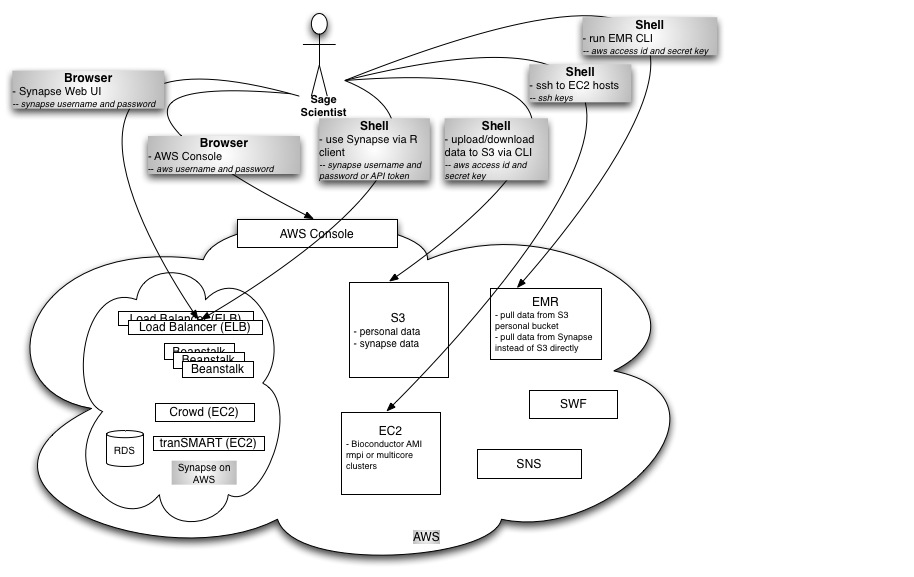

Resources Scientific Users Will Want to Access

Computation Resources

- Elastic MapReduce - for MapReduce-able type tasks

- via AWS Console

- via the EMR command line interface

- EC2 - for tasks not easily MapReduce-able

- Bioconductor AMI

- rmpi or multicore to EC2 slave hosts

Data Resources

- S3 - for source data and final results

- via AWS Console

- via s3-curl

- via Synapse R Client

- via Synapse UI

- EBS - for intermediate result data

- via EC2 hosts

Resources Synapse Devs and Admins Will Want to Access

All of the above plus:

- S3

- via BucketExplorer

- SNS

- SWF

- RDS MySQL

- RDS Oracle

- Beanstalk, therefore ELB

Current Plan

AWS accounts

For now Sage employees have their own AWS account under the consolidated bill. Synapse Devs and Admins share an account and password for production resources.

Later this year we can switch everyone to IAM when it supports Elastic MapReduce and Beanstalk via the AWS console. This is good because:

- IT folks will be able to get into their accounts, if needed

- IT folks can reset passwords and credentials, as needed

- IT folks can limit resources to which users have access, if needed

Approaches to secure AWS credentials

For the scientific staff

TODO convey this information to all employees with AWS accounts under the consolidated bill.

- All users put their AWS credentials and ssh keys in their home directory on the shared unix servers.

- The platform team will install and maintain AWS command line interface tools on our shared unix servers.

- Users should be discouraged from installing AWS command line interface tools on their laptops. Instead, ssh to the shared servers and use the tools from there. (VPN to the Hutch if at home.)

- Credentials and passwords are not to be stored in any source control systems.

- Discourage storage of credentials on laptops (but disks are encrypted so it's not a big deal).

- Discourage storage of credentials on EC2 images. Work with users on a case-by-case basis if they do have a need for this.
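The rule about keeping credentials out of source control can be backed by a simple scan before committing. The following is only a sketch, not an existing tool of ours: the `AKIA` prefix pattern for access key IDs and the list of secret-looking property names are assumptions, and `find_suspect_lines` is a hypothetical helper name.

```python
import re

# Assumption: AWS access key IDs are 20 uppercase alphanumerics starting with "AKIA".
ACCESS_KEY_RE = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")
# Assumption: a hypothetical set of property names that usually carry secrets.
SECRET_NAME_RE = re.compile(r"(?i)\b(password|secret[_.]?key)\s*[=:]\s*\S+")


def find_suspect_lines(text):
    """Return the 1-based line numbers that look like they contain credentials."""
    suspects = []
    for number, line in enumerate(text.splitlines(), start=1):
        if ACCESS_KEY_RE.search(line) or SECRET_NAME_RE.search(line):
            suspects.append(number)
    return suspects


def scan_files(paths):
    """Print the location of each suspect line (never the secret itself)."""
    found = False
    for path in paths:
        with open(path, errors="replace") as handle:
            for number in find_suspect_lines(handle.read()):
                print(f"{path}:{number}: possible credential")
                found = True
    return found
```

Calling `scan_files` on the files about to be committed and refusing the commit when it returns `True` would catch the most obvious mistakes. It will not catch every secret, so it complements the rule rather than replacing it.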

Specifically for the Platform team:

- No passwords or AWS credentials should get checked in to SVN.

- Use Beanstalk properties instead to pass passwords and credentials to our services.

- Use properties files (not checked into SVN) to pass credentials to the R/Python/Java Synapse clients. (We are currently passing them as command line parameters, but a properties file would be better.)

- For integration tests

- Pass Java properties in the maven build command.

- Caveat: we do have one test MySQL password checked in. It's best to avoid this whenever possible, though, to instill good habits.

- If you do have a test account or a test password you want to check in, use values like username: integration.test@sagebase.org and password: ThisIsAFakePassword to make it clear that they are junk.

- Consider the integration test environment as unsafe. No protected data should reside there. Atlassian has full access to those hosts. (Not that they are evil, but if they have a breach, we have a breach.)
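As an illustration of the properties-file approach for the clients, here is a minimal Python sketch. It assumes a simple `key=value` file that lives outside SVN, and it refuses to read the file if anyone other than the owner can access it, which matters on the shared unix servers. The function names and the key names are hypothetical, not part of any existing Synapse client.

```python
import os
import stat


def parse_properties(text):
    """Parse simple Java-style 'key=value' lines, skipping blanks and comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def load_credentials(path):
    """Read credentials from a properties file accessible only to its owner."""
    mode = os.stat(path).st_mode
    if mode & (stat.S_IRWXG | stat.S_IRWXO):
        raise PermissionError(
            f"{path} is accessible to group or others; run chmod 600 on it"
        )
    with open(path) as handle:
        return parse_properties(handle.read())
```

A client would call `load_credentials` with the path to the user's properties file and read the values it needs, so nothing sensitive appears on the command line or in the process list.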

Deferred ideas:

- rotation of AWS password

- rotation of AWS credentials and ssh keys

- multi-factor authentication

Approaches to secure data in transit

- Since we are only using private S3 buckets, all communication is over https

- All ssh (port 22) access will be restricted to the Fred Hutch CIDR 140.107.0.0/16

- No Amazon firewall (a.k.a. security group) should have any rules for 0.0.0.0/0, with the exception of truly public stuff such as the Synapse services.

- TODO help all current AWS users to configure their EC2 and EMR firewall rules

- All Synapse services have been configured for HTTPS

- TODO redirect HTTP connections to HTTPS PLFM-181

- The Synapse MySQL db will be restricted to only allow external access via encrypted connections originating from the Hutch CIDR PLFM-100

- Once data is in the cloud, the AWS network fabric does not allow other EC2 hosts to sniff any traffic not intended for them.
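The firewall rules above (ssh only from 140.107.0.0/16, nothing open to 0.0.0.0/0 except truly public services) could be checked mechanically. The sketch below assumes the rules have already been exported into plain dictionaries; the keys `from_port`, `to_port`, and `cidr` and the function name `audit_rules` are hypothetical and not an AWS API.

```python
import ipaddress

HUTCH_CIDR = ipaddress.ip_network("140.107.0.0/16")  # the Fred Hutch range named above
ANYWHERE = ipaddress.ip_network("0.0.0.0/0")


def audit_rules(rules, public_ports=()):
    """Return findings for rules that break the policy described above.

    Each rule is a dict with hypothetical keys 'from_port', 'to_port', 'cidr'.
    public_ports lists ports that may legitimately be open to everyone.
    """
    findings = []
    for rule in rules:
        source = ipaddress.ip_network(rule["cidr"])
        ports = range(rule["from_port"], rule["to_port"] + 1)
        # ssh must only be reachable from inside the Hutch range.
        if 22 in ports and not source.subnet_of(HUTCH_CIDR):
            findings.append(f"ssh open to {source}, expected a range inside {HUTCH_CIDR}")
        # Anything open to the world must be an explicitly public port.
        if source == ANYWHERE and not all(p in public_ports for p in ports):
            findings.append(
                f"ports {rule['from_port']}-{rule['to_port']} open to {ANYWHERE}"
            )
    return findings
```

For the Synapse services, `public_ports` would presumably be `(443,)`, plus 80 if HTTP is kept open only to redirect to HTTPS.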

Deferred ideas:

- VPC won't work for us yet since it does not support EMR, Beanstalk, RDS, ...

Approaches to secure data at rest

- TODO make sure all current AWS users are only using private S3 buckets

- Encourage all Sage scientists to interact with S3 via S3 URLs vended from Synapse so that IT can keep the data organized and secured properly

- TODO Synapse needs more work for this to be feasible

- TODO set up monitoring of the S3 audit logs to look for intrusions
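A first pass at monitoring the S3 logs could flag requests that come from unexpected addresses or that were denied. The sketch below assumes the space-delimited S3 server access log layout (bucket owner, bucket, bracketed time, remote IP, requester, request ID, operation, key, quoted request URI, HTTP status) and only parses those leading fields. Treating the Hutch CIDR as the sole trusted range is also an assumption; a real list would need to include our EC2 hosts.

```python
import ipaddress
import re

TRUSTED = ipaddress.ip_network("140.107.0.0/16")  # assumption: Hutch range only

# Assumed layout of the leading fields of an S3 server access log line.
LOG_RE = re.compile(
    r'^(?P<owner>\S+) (?P<bucket>\S+) \[(?P<time>[^\]]+)\] (?P<remote_ip>\S+) '
    r'(?P<requester>\S+) (?P<request_id>\S+) (?P<operation>\S+) (?P<key>\S+) '
    r'(?P<request_uri>"[^"]*"|-) (?P<status>\S+)'
)


def suspicious_entries(log_text, trusted=TRUSTED):
    """Return parsed entries from outside the trusted range or with status 403."""
    flagged = []
    for line in log_text.splitlines():
        match = LOG_RE.match(line)
        if not match:
            continue  # skip lines that do not fit the assumed layout
        entry = match.groupdict()
        try:
            outside = ipaddress.ip_address(entry["remote_ip"]) not in trusted
        except ValueError:
            outside = False  # e.g. "-" when no client address is recorded
        if outside or entry["status"] == "403":
            flagged.append(entry)
    return flagged
```

Flagged entries are only candidates for review; a denied request from inside the Hutch is more likely a misconfigured client than an intrusion.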

Deferred ideas:

- store data encrypted

Approaches to secure applications (Synapse)

- TODO finish our implementation of authentication and authorization

- Have a platform team meeting about security and talk about everything on this wiki including: (meeting held on June 15, 2011)

- SQL injection

- Cross-site scripting

- Cross-site request forgery

- Update AMIs used for services when Amazon releases updates to their Linux AMI

- All Synapse servers will have iptables configured to restrict outgoing connections IT-61

- Update the MySQL accounts to have a service user that is different than the root user IT-60
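For the SQL injection discussion, a small example may help show why parameterized queries matter. This sketch uses Python's built-in `sqlite3` with an in-memory database purely as a stand-in for the Synapse MySQL database; the `users` table and the function names are hypothetical.

```python
import sqlite3


def demo_connection():
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable: attacker-controlled text becomes part of the SQL statement.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized: the driver passes name as data, never as SQL.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()
```

Passing a value such as `x' OR '1'='1` to `find_user_unsafe` turns the WHERE clause into a condition that is always true and returns every row, while `find_user_safe` treats the same value as an ordinary (non-matching) name.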

Amazon systems for security

We may use a combination of these as needed:

Default Stuff

http://aws.amazon.com/security/

e.g., the firewall settings via the AWS console for EC2 hosts and EMR hosts

e.g., ssh-ing to EC2 hosts provides encryption

e.g., HTTPS to S3 provides encryption

e.g., all CLIs encrypt their traffic

VPC

Hopes:

- Will this make firewall configuration easier?

- Can we prevent users from changing firewall settings?

Summary:

- We cannot use VPC for the Synapse stack yet because it does not support Beanstalk or RDS.

- We cannot use VPC for scientific computing because it does not support EMR or cluster instances.

Service limitations with the current implementation of Amazon VPC:

- Amazon VPC is currently available only in the Amazon EC2 US-East (Northern Virginia) Region, or in the Amazon EC2 EU-West (Ireland) Region, and only in a single Availability Zone.

- You can have one VPC per account per Region.

- You can assign one IP address range to your VPC.

- Once you create a VPC or subnet, you can't change its IP address range.

- If you plan to have a VPN connection to your VPC, then you can have one VPN gateway, one customer gateway, and one VPN connection per AWS account per Region.

- You can't use either broadcast or multicast within your VPC.

- Amazon EC2 Spot Instances, Cluster Instances, and Micro Instances do not work with a VPC.

- Amazon Elastic Block Store (EBS), Amazon CloudWatch, and Auto Scaling are available with all your instances in a VPC.

- AWS Elastic Beanstalk, Elastic Load Balancing, Amazon Elastic MapReduce, Amazon Relational Database Service (Amazon RDS), and Amazon Route 53 are not available with your instances in a VPC.

- Amazon DevPay paid AMIs do not work with a VPC.

- VPC and S3 do work fine together now.

- VPC does not work with Elastic MapReduce

- VPC does not work with Beanstalk

IAM

Can we easily give scientists access to all the stuff they do need without making it complicated?

- IAM works for the EMR CLI but not yet for the AWS console (this will happen soon, though)

- IAM does not help much with EC2 (but this will happen this year)

Multi-Factor Authentication

Summary:

- This does not seem very useful if a person who has your access id and secret key can still call any API via the command line interfaces.

How does this work with the CLIs?

http://aws.amazon.com/mfa/faqs/

Q. Does AWS MFA affect how I access AWS Service APIs?

Only for S3 PUT Bucket versioning, GET Bucket versioning and DELETE Object APIs, which enables you to require that deleting or changing the versioning state of your bucket use an additional authentication code. For more information see the S3 documentation discussing Configuring a Bucket with MFA Delete in more detail. AWS MFA does not currently change the way you access AWS service APIs for any of the other services.

Cloud Formation

This doesn't help with security; it just makes setting up Synapse stacks repeatable.