S3 Locations Design v2

Problems with the current S3 design

- There is a delay of a couple of seconds between creating an IAM user and being able to use it on S3. As a result, new Synapse users whose first download is via the R client get an exception; when they try again a few seconds later, it works.

- For files larger than 5GB, Multipart Upload is required. It appears (pending confirmation from AWS) that you must have an accessId and secretKey to perform the multipart upload. We cannot let clients have the IAM credentials we create for them, because those credentials have permission to access all objects in the S3 bucket due to scaling limitations with IAM. (Note that federated users and security tokens did not launch until August 2011.)
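As a rough illustration of the size constraint above, the sketch below checks whether a file needs Multipart Upload and how many parts it would take. The limits used (5 GiB for a single PUT, 5 MiB minimum part size, 10,000 parts maximum) are the published S3 limits; the function names are hypothetical and not part of any Synapse client.

```python
# Published S3 limits; the helper names below are illustrative only.
SINGLE_PUT_LIMIT = 5 * 1024 ** 3   # largest object a single PUT can carry
MIN_PART_SIZE = 5 * 1024 ** 2      # smallest allowed part (except the last one)
MAX_PARTS = 10_000                 # most parts allowed in one multipart upload


def requires_multipart(file_size: int) -> bool:
    """Return True when the file is too large for a single PUT."""
    return file_size > SINGLE_PUT_LIMIT


def part_count(file_size: int, part_size: int) -> int:
    """Return how many parts a multipart upload of file_size bytes needs."""
    if part_size < MIN_PART_SIZE:
        raise ValueError("part_size is below the S3 minimum part size")
    # Integer ceiling division, so very large sizes avoid float rounding.
    count = max(1, (file_size + part_size - 1) // part_size)
    if count > MAX_PARTS:
        raise ValueError("too many parts; choose a larger part_size")
    return count
```

For example, a 6 GiB file split into 64 MiB parts needs 96 parts.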

Solution

Repository Service Changes

- Switch from IAM users to federated users and security tokens so that we can sufficiently limit the permissions afforded by those credentials

- add a new request url /<LocationableEntityType>/<id>/s3Token to get a security token for use in an upload

- complete the work to collapse layer and location

- move md5 and content type from LocationData to Layer (all LocationDatas for a Layer should be holding files that match the md5)

- turn on auto-versioning when the md5 changes for any locationable object
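To make the first bullet concrete, here is a minimal sketch of the kind of policy document that could be attached to a federated user's security token so that it only reaches one entity's objects. The bucket name, the `<entityId>/` key prefix, and the function name are assumptions for illustration; the design above does not fix the exact key layout or the set of allowed actions.

```python
import json


def build_entity_policy(bucket: str, entity_id: str) -> str:
    """Return a policy document (JSON) scoped to one entity's key prefix.

    Assumes, hypothetically, that an entity's objects live under
    "<entity_id>/" in the bucket.
    """
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # PutObject also covers initiating and uploading multipart parts.
                "Action": [
                    "s3:GetObject",
                    "s3:PutObject",
                    "s3:AbortMultipartUpload",
                ],
                "Resource": f"arn:aws:s3:::{bucket}/{entity_id}/*",
            }
        ],
    }
    return json.dumps(policy)
```

A document like this would be passed as the policy when requesting the federation token, so the resulting credentials cannot touch any other entity's objects.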

Client Changes

- (Dropped) Upgrade the web client to add one extra header containing the security token when performing downloads from S3. This turned out not to be necessary: presigned URLs work for federated users

- Upgrade the java client to do multipart uploads and downloads by default

- Modify the R client to use the Java client for uploads/downloads

- Note no special client is needed for multipart downloads because it is part of the HTTP protocol via the Range header and not an extension to the S3 API
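The last bullet can be sketched as follows: a multipart download is just a series of ordinary GET requests, each carrying a Range header for one slice of the object. The function name and the part size are illustrative assumptions; the header format is the standard HTTP byte-range syntax.

```python
from typing import List


def range_headers(total_size: int, part_size: int) -> List[str]:
    """Return one HTTP Range header value per part of the download."""
    if total_size < 0 or part_size <= 0:
        raise ValueError("total_size must be >= 0 and part_size must be > 0")
    headers = []
    start = 0
    while start < total_size:
        # Byte ranges are inclusive on both ends.
        end = min(start + part_size, total_size) - 1
        headers.append(f"bytes={start}-{end}")
        start = end + 1
    return headers
```

For a 10-byte object fetched in 4-byte parts this yields `bytes=0-3`, `bytes=4-7`, and `bytes=8-9`; each request can run in parallel and the client reassembles the slices in order.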

Data Migration Plan

Incremental Switch

- When loading data for locationable entities, first look in the new spot to see if location data is there; if not, look in the old spot

- When storing data for locationable entities, clients use the new API

- Run a background task to walk all locationable entities, downloading and then re-uploading the data for each (which has the side effect of versioning the entity)

- Pros:

  - presumably no outage needed

- Cons:

  - confusing, more likely to make errors and corrupt data

  - we may never retire the old API
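The read path of the incremental switch could look roughly like the sketch below. The two dictionaries stand in for the new and old storage locations, and the function name is hypothetical; the real lookup would go through the repository service's persistence layer.

```python
from typing import Mapping, Optional


def load_location_data(
    entity_id: str,
    new_store: Mapping[str, dict],
    old_store: Mapping[str, dict],
) -> Optional[dict]:
    """Prefer location data in the new spot, falling back to the old spot."""
    data = new_store.get(entity_id)
    if data is not None:
        return data
    # Not migrated yet: read from the old spot until the background task
    # has re-uploaded this entity.
    return old_store.get(entity_id)
```

Writes always go to the new spot, so once the background task has visited every entity the fallback branch is never taken and the old spot can, in principle, be retired.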

The Big Switch

- copy all S3 data to new S3 bucket so that the S3 urls have the correct entity prefix

- copy values in Location entities to Layer entities with new S3 paths

- nuke all Location entities

- nuke code for Location entities

- Pros:

  - very safe, we can keep the old S3 bucket and database backup for a long time

- Cons:

  - might be a long outage
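The first two steps of the big switch amount to computing a new key for every existing object. The sketch below builds such a copy plan under a purely hypothetical layout in which the new key is `<entityId>/<original file name>`; the actual prefix scheme is not specified in this document, and the function name is illustrative.

```python
import posixpath
from typing import Iterable, List, Tuple


def plan_copies(locations: Iterable[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Map (entity_id, old_key) pairs to (old_key, new_key) copy operations.

    The "<entity_id>/<file name>" layout is an assumption for illustration.
    """
    plan = []
    for entity_id, old_key in locations:
        file_name = posixpath.basename(old_key)
        plan.append((old_key, f"{entity_id}/{file_name}"))
    return plan
```

Because the plan only copies and never deletes, the old bucket stays intact as the fallback described under Pros.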

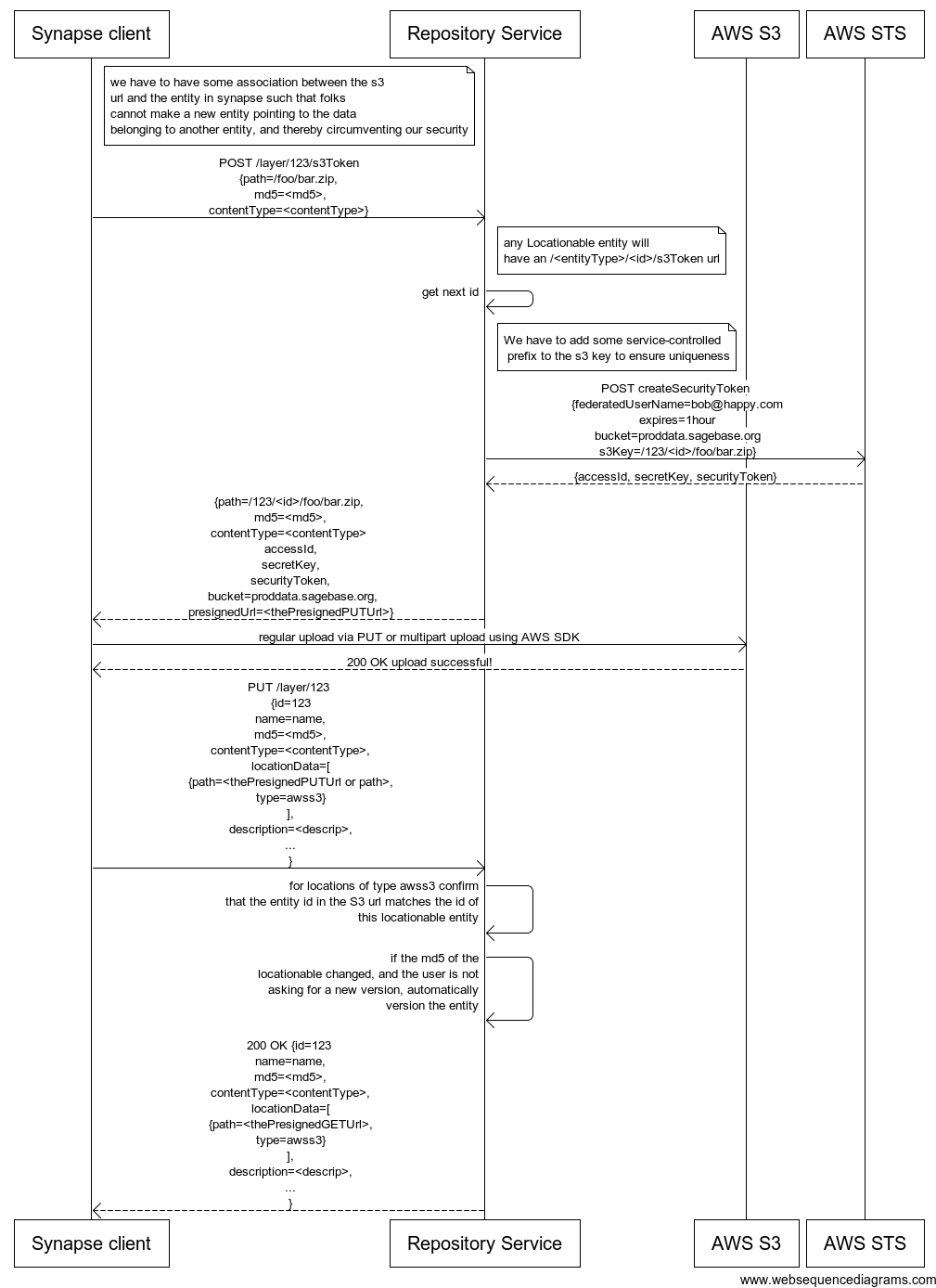

Sequence Diagram for Design

Alternate Approaches

Skip Federated Users and just add a tracking ID to the presigned urls for audit purposes

I've confirmed that you can include a tracking query string along with your signed URL. However, the query string cannot be part of the signed message. In other words, when you sign the URL, the string to sign should only be objectkey+S3bucket+expiretime. Example URL:

http://s3.amazonaws.com/testbucket/foo.obj?Expires=X&AWSAccessKeyId=XXXXX&Signature=XXX&ID=YYYYYY

In this example ID=YYY is your unique tracking id. The only caveat with this option is that users can remove the tracking ID and still be able to upload/download from S3. Not sure if that's going to be a concern.

We did not go with this: because users can strip off the tracking tag and still use the URL, it doesn't meet our bar for auditing, per Mike.
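The weakness described above can be seen in a minimal sketch of query-string signing. It follows the legacy S3 Signature Version 2 scheme that matches the example URL (newer regions require Signature Version 4); the function name, credentials, and the `ID` parameter name are placeholders. The tracking ID is appended after signing, so it is not covered by the signature.

```python
import base64
import hashlib
import hmac
from typing import Optional
from urllib.parse import quote, urlencode


def presign_get_url(bucket: str, key: str, access_key_id: str, secret_key: str,
                    expires: int, tracking_id: Optional[str] = None) -> str:
    """Build a Signature Version 2 presigned GET URL, optionally tagged."""
    resource = f"/{bucket}/{quote(key)}"
    # String to sign for a GET with no Content-MD5, Content-Type, or
    # x-amz headers: verb, two empty lines, expiry, canonical resource.
    string_to_sign = f"GET\n\n\n{expires}\n{resource}"
    digest = hmac.new(secret_key.encode("utf-8"),
                      string_to_sign.encode("utf-8"),
                      hashlib.sha1).digest()
    signature = base64.b64encode(digest).decode("ascii")
    params = [("Expires", str(expires)),
              ("AWSAccessKeyId", access_key_id),
              ("Signature", signature)]
    if tracking_id is not None:
        # Added after signing, so removing it leaves a valid URL.
        params.append(("ID", tracking_id))
    return f"https://s3.amazonaws.com{resource}?{urlencode(params)}"
```

Calling the function with and without `tracking_id` produces URLs that differ only by the trailing `&ID=...`, which is exactly why a user can strip the tag without invalidating the signature.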

Use a different library than the AWS Java SDK

http://jets3t.s3.amazonaws.com/index.html

http://jets3t.s3.amazonaws.com/toolkit/code-samples.html

We did not pull this in because we already have the AWS Java SDK as a dependency and it is meeting our needs right now. If we later support other cloud storage vendors, we might switch to this library, since it works with several.