Data Sources

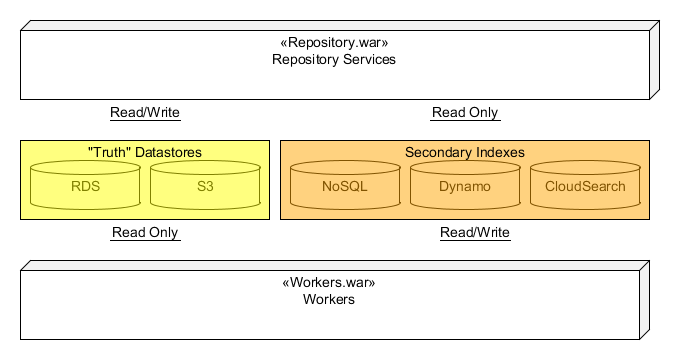

At the highest level, the Synapse REST API is supported by a single war file called repository.war, deployed to Amazon's Elastic Beanstalk. While the repository services read data from many sources, including RDS (MySQL), S3, CloudSearch, Dynamo, and SQS, they only write to RDS and S3.

Any REST call that writes data always runs in a single database transaction. This includes writes where data is stored in S3. In such cases, data is first written to S3 using a key containing a UUID. The key is then stored in RDS as part of the single transaction. This means any S3 data or RDS data will always have read/write consistency. All other data sources are eventually consistent.
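The write ordering described above can be sketched as follows. This is only a minimal illustration using in-memory maps as stand-ins for S3 and RDS; the class name, the key format, and the `failTransaction` flag are assumptions made for the example and are not taken from the Synapse code base.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical sketch: in-memory stand-ins for S3 and RDS, not the real Synapse classes.
class FileWriteSketch {
    static final Map<String, byte[]> s3 = new HashMap<>();          // stand-in for an S3 bucket
    static final Map<String, String> rdsFileKeys = new HashMap<>(); // stand-in for an RDS table

    // Writes the bytes to "S3" under a key containing a fresh UUID,
    // then stores that key in "RDS" as the transactional step.
    static String writeFile(String fileId, byte[] data, boolean failTransaction) {
        String key = fileId + "/" + UUID.randomUUID(); // assumed key format
        s3.put(key, data);                             // step 1: upload to S3
        if (failTransaction) {
            // Simulated rollback: the key never reaches RDS.
            throw new IllegalStateException("transaction rolled back");
        }
        rdsFileKeys.put(fileId, key);                  // step 2: commit the key to RDS
        return key;
    }

    // Readers resolve the S3 key through RDS, so they only see committed writes.
    static byte[] readFile(String fileId) {
        String key = rdsFileKeys.get(fileId);
        return key == null ? null : s3.get(key);
    }

    public static void main(String[] args) {
        writeFile("file1", "hello".getBytes(), false);
        System.out.println(new String(readFile("file1")));
    }
}
```

In this sketch a rolled-back transaction leaves an unreferenced object in the S3 stand-in, but no reader can reach it, because every read goes through the key stored in RDS.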

Asynchronous processing

As mentioned above, the repository services only write to RDS and S3. All other data sources (Dynamo, CloudSearch, etc.) are secondary and serve as indexes for quick data retrieval for things such as ad hoc queries and search. These secondary indexes are populated by a secondary application called "workers". The details of these workers will be covered in more detail later, but for now, think of the workers as a suite of processes that respond to messages generated by the repository services.

Message generation

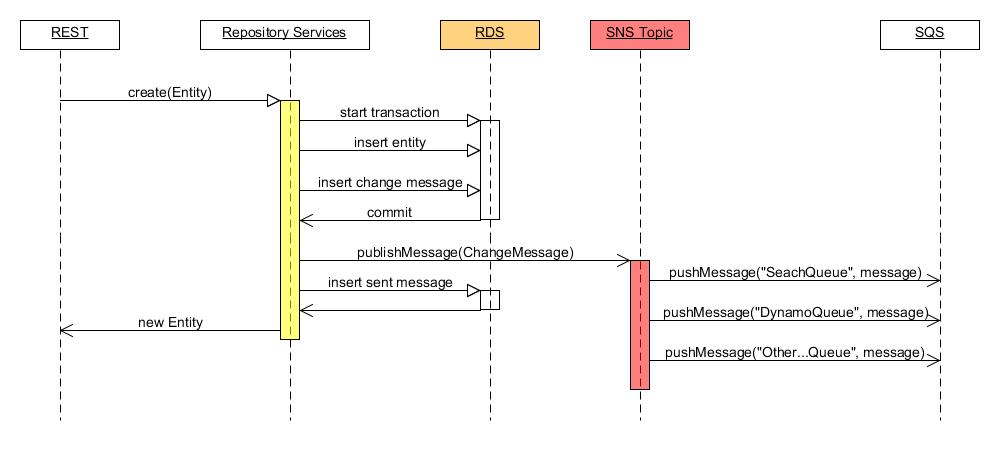

Anytime data is written to Synapse through the repository services, a message is generated and sent to an Amazon SNS Topic. The topic acts as a message syndication system that pushes a copy of the message to all Amazon SQS queues that are registered with the topic. Each queue has a dedicated worker that processes messages pushed to the queue. The sequence diagram shows how the repository service generates a message in response to a write:

In the above example, a create entity call is made, resulting in the start of a database transaction. The entity is then inserted into RDS. A change message containing metadata about this new entity (including ID and Etag) is also inserted into the changes table in RDS as part of this initial transaction. The change message object is also bound to the transaction in an in-memory queue. When this initial transaction is committed, a transaction listener is notified, and all messages bound to that transaction are sent to an SNS Topic.
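The sequence above can be illustrated with a toy transaction that buffers both the rows and the bound messages until commit. This is a minimal sketch under stated assumptions: the lists stand in for the RDS tables and the SNS topic, and the `Transaction` class, its method names, and the `id:etag` message format are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of "publish only after commit"; not the Synapse implementation.
class TransactionalMessageSketch {
    static final List<String> entityTable = new ArrayList<>();  // stand-in for the entity table
    static final List<String> changesTable = new ArrayList<>(); // stand-in for the changes table
    static final List<String> topic = new ArrayList<>();        // stand-in for the SNS topic

    // A toy transaction that buffers rows and messages until commit.
    static class Transaction {
        private final List<String> pendingEntities = new ArrayList<>();
        private final List<String> pendingChanges = new ArrayList<>();
        private final List<String> boundMessages = new ArrayList<>();

        void createEntity(String id, String etag) {
            pendingEntities.add(id);
            String change = id + ":" + etag; // assumed message format
            pendingChanges.add(change);      // change row joins the same transaction
            boundMessages.add(change);       // message is bound to the transaction in memory
        }

        void commit() {
            entityTable.addAll(pendingEntities);
            changesTable.addAll(pendingChanges);
            afterCommit();
        }

        void rollback() {
            pendingEntities.clear();
            pendingChanges.clear();
            boundMessages.clear(); // nothing is ever published for a rolled-back write
        }

        // Plays the role of the transaction listener: runs only after a commit.
        private void afterCommit() {
            topic.addAll(boundMessages);
            boundMessages.clear();
        }
    }

    public static void main(String[] args) {
        Transaction tx = new Transaction();
        tx.createEntity("syn1", "etag-a");
        System.out.println("before commit: " + topic.size());
        tx.commit();
        System.out.println("after commit: " + topic.size());
    }
}
```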

Message Generation Fail-safe

Under adverse conditions it is possible that an RDS write is committed, yet a message is not sent to the topic. For example, the repository services instance may be shut down after a commit but before the messages can be sent to the topic. A special worker is used to detect and recover from such failures. This worker scans for deltas between the changes table and the sent message table. Anytime a discrepancy is found, the worker will attempt to re-send the failed message(s). This worker also plays an important role in stack migration, which will be covered in more detail in a later section.
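The delta scan can be pictured as a set difference between the two tables. The sketch below is only an illustration: it assumes each change is identified by a numeric change number, which is not stated in the text, and uses in-memory collections in place of the changes table, the sent message table, and the topic.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of the fail-safe worker's delta scan; table layouts are assumed.
class ChangeSentReconcilerSketch {
    // Returns the change numbers present in the changes table but absent from the sent table.
    static List<Long> findUnsent(Set<Long> changes, Set<Long> sent) {
        List<Long> missing = new ArrayList<>();
        for (Long changeNumber : changes) {
            if (!sent.contains(changeNumber)) {
                missing.add(changeNumber);
            }
        }
        return missing;
    }

    // Re-sends every missing change to the topic and records it as sent.
    // Returns how many messages were re-sent.
    static int resend(Set<Long> changes, Set<Long> sent, List<Long> topic) {
        List<Long> missing = findUnsent(changes, sent);
        for (Long changeNumber : missing) {
            topic.add(changeNumber);
            sent.add(changeNumber);
        }
        return missing.size();
    }

    public static void main(String[] args) {
        Set<Long> changes = new LinkedHashSet<>(Arrays.asList(1L, 2L, 3L));
        Set<Long> sent = new HashSet<>(Arrays.asList(1L, 3L));
        List<Long> topic = new ArrayList<>();
        System.out.println("re-sent: " + resend(changes, sent, topic));
    }
}
```

Running the scan a second time finds nothing to re-send, since each recovered change is recorded in the sent set.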

Message Guarantees:

- A Change Message is recorded with the original transaction. If the write is committed, then so is the record of the change in the changes table.

- Messages are not published until after the transaction commits, so race conditions on message processing are not possible.

- Under normal conditions, messages are sent immediately.

- A system is in place to detect and re-send any lost message.

Message processing

Each queue has its own class of dedicated worker that pops messages from the queue and writes data to a secondary data source. These workers are all bundled into a special application called "workers". The workers application is deployed to Elastic Beanstalk in the workers.war file. Unlike the repository services, the workers application does not actually handle any web requests (other than administration support). Instead, we use the "elastic" properties of Beanstalk to manage a cluster of workers. This includes automatically scaling up and down, multi-zone deployment, and failure recovery.

Each worker is run off its own Quartz Trigger as part of a larger Quartz scheduler. Worker concurrency across the cluster is controlled using an RDS-backed "Semaphore". Each worker is assigned its own semaphore key, the maximum number of concurrent processes across the entire cluster, and a maximum run-time (timeout). Some classes of workers must be run one at a time, while others are capable of running multiple instances in parallel.
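A counting semaphore with a per-lock timeout could look roughly like the sketch below. It is an in-memory approximation only: the real semaphore is backed by RDS, and the class name, method signatures, and the explicit `nowMs` parameter (used here to keep the example deterministic) are all assumptions.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical in-memory sketch of a keyed counting semaphore with lock expiry.
class CountingSemaphoreSketch {
    // key -> (lock token -> expiry time in ms); stand-in for a lock table in RDS
    private final Map<String, Map<String, Long>> locks = new HashMap<>();

    // Tries to take one of maxLockCount slots for the key.
    // Returns a lock token, or null when every slot is currently held.
    synchronized String tryAcquire(String key, int maxLockCount, long timeoutMs, long nowMs) {
        Map<String, Long> holders = locks.computeIfAbsent(key, k -> new HashMap<>());
        // Locks past their maximum run-time are treated as released.
        holders.values().removeIf(expiry -> expiry <= nowMs);
        if (holders.size() >= maxLockCount) {
            return null;
        }
        String token = UUID.randomUUID().toString();
        holders.put(token, nowMs + timeoutMs);
        return token;
    }

    // Releases a lock early, for a worker that finished before its timeout.
    synchronized void release(String key, String token) {
        Map<String, Long> holders = locks.get(key);
        if (holders != null) {
            holders.remove(token);
        }
    }

    public static void main(String[] args) {
        CountingSemaphoreSketch semaphore = new CountingSemaphoreSketch();
        String first = semaphore.tryAcquire("searchWorker", 1, 1000, 0);
        String second = semaphore.tryAcquire("searchWorker", 1, 1000, 500);
        System.out.println("first acquired: " + (first != null));
        System.out.println("second blocked: " + (second == null));
    }
}
```

A worker that must run one at a time would use a maximum of 1, while a worker that can run in parallel would use a larger count. The timeout ensures a lock held by a crashed instance eventually becomes available again.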

The resilience of these workers is provided by a combination of the features of Amazon's SQS, Elastic Beanstalk, and Quartz.

Repository Layers

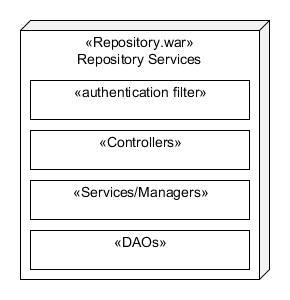

Each repository service is composed of at least four distinct layers:

Authentication Filter

The Authentication filter is a servlet filter that is applied to most repository service URLs. The filter's only function is to authenticate the caller. There are several mechanisms with which a user may authenticate, including a Session Token added to the header of the request or signing the request using their API key. For more information about authentication see: Authentication Controller. Once the filter authenticates a user, the user's ID is passed along with the request.

Controllers

The controller layer simply maps all of the components of an HTTP request into a Java method call. This includes the URL path, query parameters, request and response body marshaling, error handling and other response codes. Each controller also groups calls in the same category into a single file. The javadocs of each controller also serve as the source for the REST API documentation auto-generation. Each controller is a very thin layer that depends on the service layer.

All request and response bodies for the repository services have a JSON format. The controller layer is where all Plain Old Java Objects (POJOs) are marshaled to/from JSON. Every request and response object is defined by a JSON schema, which is then used to auto-generate a POJO. For more information on this process see the /wiki/spaces/JSTP/pages/7867487 and the /wiki/spaces/JSTP/overview project.

Services/Manager

Ideally, the services/managers layer encapsulates all repository services "business logic". This business logic includes any object composition/decomposition. This layer is also where all authorization rules are applied. If the caller is not allowed to perform some action, an UnauthorizedException will be raised. Managers interact with datastores such as RDS using a DAO, which will be covered in more detail in the next section.
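As a rough illustration of this layering, the sketch below shows a manager that applies an authorization rule before delegating to a DAO. Everything here is hypothetical: the `TitleManager` and `TitleDao` names, the "only the owner may update" rule, and the locally defined `UnauthorizedException` are assumptions chosen to keep the example self-contained.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of a manager enforcing authorization in front of a DAO.
class ManagerAuthorizationSketch {
    // Local stand-in for the exception named in the text.
    static class UnauthorizedException extends RuntimeException {
        UnauthorizedException(String message) {
            super(message);
        }
    }

    // Minimal DAO abstraction; the manager never touches the datastore directly.
    interface TitleDao {
        String getOwnerId(String objectId);
        void updateTitle(String objectId, String title);
    }

    // In-memory DAO used only for this sketch.
    static class InMemoryTitleDao implements TitleDao {
        final Map<String, String> owners = new HashMap<>();
        final Map<String, String> titles = new HashMap<>();

        public String getOwnerId(String objectId) {
            return owners.get(objectId);
        }

        public void updateTitle(String objectId, String title) {
            titles.put(objectId, title);
        }
    }

    static class TitleManager {
        private final TitleDao dao;

        TitleManager(TitleDao dao) {
            this.dao = dao;
        }

        // The authorization rule runs first; the DAO is called only if it passes.
        void updateTitle(String callerId, String objectId, String title) {
            if (!callerId.equals(dao.getOwnerId(objectId))) { // assumed rule: owner only
                throw new UnauthorizedException("User " + callerId + " cannot update " + objectId);
            }
            dao.updateTitle(objectId, title);
        }
    }

    public static void main(String[] args) {
        InMemoryTitleDao dao = new InMemoryTitleDao();
        dao.owners.put("wiki1", "alice");
        new TitleManager(dao).updateTitle("alice", "wiki1", "Home");
        System.out.println(dao.titles.get("wiki1"));
    }
}
```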

DAO

A Data Access Object (DAO) serves as an abstraction to a datastore. For example, WikiPages are stored in an RDS MySQL database. The WikiPageDAO serves as an abstraction for all calls to the database. This forces the separation of the "business logic" from the details of Creating, Reading, Updating, and Deleting (CRUD) objects from a datastore.

One of the major jobs of a DAO is the translation between Data Transfer Objects (DTOs), which are the same objects exposed in the public API, and Database Objects (DBOs). This translation means DTOs and DBOs can be loosely coupled, so a database schema change might not result in an API change and vice versa. This also provides the means to both normalize and de-normalize relational database data. For example, a WikiPage object (a DTO) provided by an API caller will be translated into four normalized relational database tables, each with a corresponding DBO.
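The DTO/DBO translation can be sketched as below. This is a simplified illustration, not the real WikiPageDAO: it splits the DTO across two hypothetical tables rather than the four mentioned above, and the field names, DBO class names, and in-memory collections standing in for database tables are all assumptions.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of a DAO translating between a DTO and normalized DBOs.
class WikiPageDaoSketch {
    // DTO: the shape an API caller sees (fields assumed for illustration).
    static class WikiPage {
        String id;
        String title;
        List<String> attachmentIds = new ArrayList<>();
    }

    // DBOs: one field per column of a hypothetical table.
    static class DBOWikiPage {
        long id;
        String title;
    }

    static class DBOWikiAttachment {
        long wikiId;
        String fileHandleId;
    }

    private final Map<Long, DBOWikiPage> pageTable = new HashMap<>();          // stand-in table
    private final List<DBOWikiAttachment> attachmentTable = new ArrayList<>(); // stand-in table

    // DTO -> DBOs: normalizes one API object into rows for two tables.
    void create(WikiPage dto) {
        DBOWikiPage page = new DBOWikiPage();
        page.id = Long.parseLong(dto.id);
        page.title = dto.title;
        pageTable.put(page.id, page);
        for (String fileHandleId : dto.attachmentIds) {
            DBOWikiAttachment row = new DBOWikiAttachment();
            row.wikiId = page.id;
            row.fileHandleId = fileHandleId;
            attachmentTable.add(row);
        }
    }

    // DBOs -> DTO: de-normalizes the rows back into one API object.
    WikiPage get(String id) {
        DBOWikiPage page = pageTable.get(Long.parseLong(id));
        if (page == null) {
            return null;
        }
        WikiPage dto = new WikiPage();
        dto.id = Long.toString(page.id);
        dto.title = page.title;
        for (DBOWikiAttachment row : attachmentTable) {
            if (row.wikiId == page.id) {
                dto.attachmentIds.add(row.fileHandleId);
            }
        }
        return dto;
    }

    public static void main(String[] args) {
        WikiPageDaoSketch dao = new WikiPageDaoSketch();
        WikiPage page = new WikiPage();
        page.id = "42";
        page.title = "Home";
        page.attachmentIds.add("file1");
        dao.create(page);
        System.out.println(dao.get("42").title);
    }
}
```

Because callers only ever see `WikiPage`, the two table layouts in this sketch could change without affecting the API object, which is the loose coupling the paragraph above describes.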

Acronym summary:

- DTO - Data Transfer Object is the same object end-users see/use when making API calls.

- DBO - Database Object is a POJO that maps directly to a table. For every column of the table there will be a matching field in the corresponding DBO.

- DAO - Data Access Object is the abstraction that translates DTOs into DBOs and directly performs all datasource CRUD.

- CRUD - Create, Read, Update, and Delete.