Note that we went with pre-signed URLs, and we plan to switch from IAM users to IAM tokens when that feature is launched.

Dataset Hosting Design


...

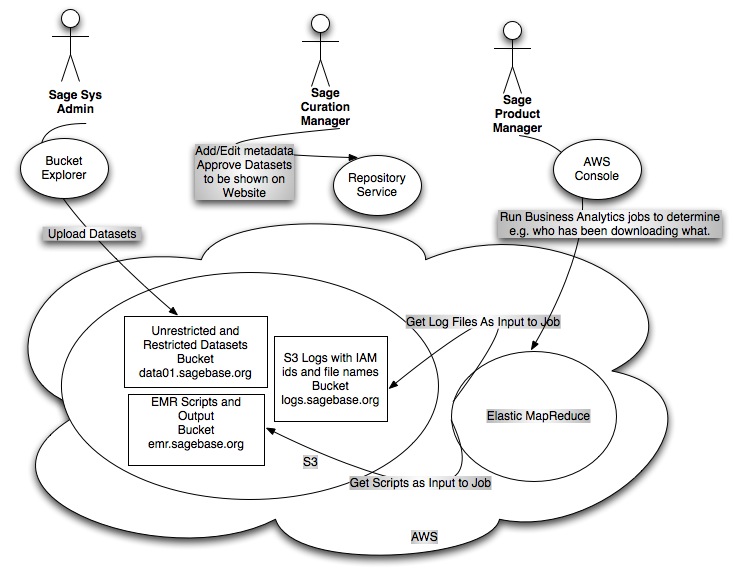

- All data is stored on S3, with Amazon Web Services as our hosting partner.

- All data will be served over SSL/HTTPS.

- We will have one Identity and Access Management (IAM) group with read-only access to all datasets.

- We will generate and store IAM credentials for each user who signs any EULA for any dataset. The user will be added to the read-only IAM group.

- We never give those IAM credentials out. We only use them to generate pre-signed S3 URLs with an expiry time of about an hour.

- With these pre-signed URLs, users can download data directly from S3 using the Web UI, the R client, or even something as simple as curl.

- Our Crowd groups are more granular and determine which users may receive pre-signed URLs for which datasets.

- Using IAM lets us track, via the S3 access logs, who has downloaded what.

- Users can download these files to EC2 hosts (no bandwidth charges for Sage) or to external locations (Sage pays bandwidth charges).

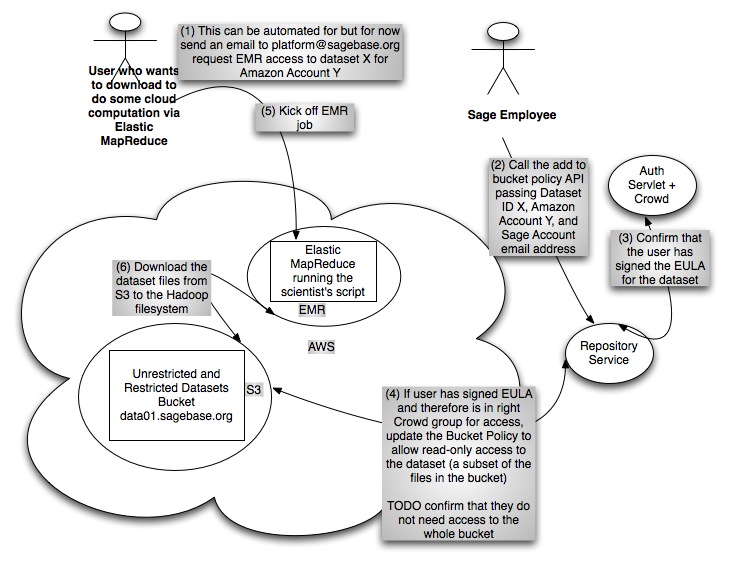

- For users who want to use Elastic MapReduce, which does not currently support IAM, we will add them to the bucket policy for the dataset bucket with read-only access.
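The following is a rough illustration of what "generate pre-signed S3 URLs" involves. It is a minimal sketch of S3 query-string request authentication using Signature Version 2, the scheme described in the S3 QSAuth documentation referenced on this page. The `AWSAccessKeyId`, `Expires` and `Signature` parameters are the ones visible in the sample log entry on this page. Several details are assumptions for illustration: the function name `presign_get_url`, the path-style `s3.amazonaws.com` endpoint, and the one-hour default. In practice an AWS SDK would do this signing, and newer AWS regions accept only Signature Version 4.

```python
import base64
import hashlib
import hmac
import time
from urllib.parse import quote


def presign_get_url(bucket, key, access_key_id, secret_key,
                    expires_in=3600, now=None):
    """Return a pre-signed GET URL (S3 query-string auth, Signature V2).

    Hypothetical helper: the IAM credentials stay on the server and only
    the resulting URL is handed to the user.
    """
    issued_at = int(time.time()) if now is None else int(now)
    # Expires is an absolute Unix timestamp, not a duration.
    expires = issued_at + expires_in
    # Path-style resource; "/" inside the key is kept as a path separator.
    resource = "/%s/%s" % (bucket, quote(key, safe="/"))
    # VERB, Content-MD5, Content-Type, Expires, CanonicalizedResource.
    # A plain GET has no Content-MD5, Content-Type or x-amz-* headers.
    string_to_sign = "GET\n\n\n%d\n%s" % (expires, resource)
    digest = hmac.new(secret_key.encode("utf-8"),
                      string_to_sign.encode("utf-8"),
                      hashlib.sha1).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return ("https://s3.amazonaws.com%s?AWSAccessKeyId=%s&Expires=%d&Signature=%s"
            % (resource, quote(access_key_id, safe=""), expires,
               quote(signature, safe="")))
```

Passing `expires_in=60` would give the one-minute window planned for the repository service.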

Sage Employee Use Case

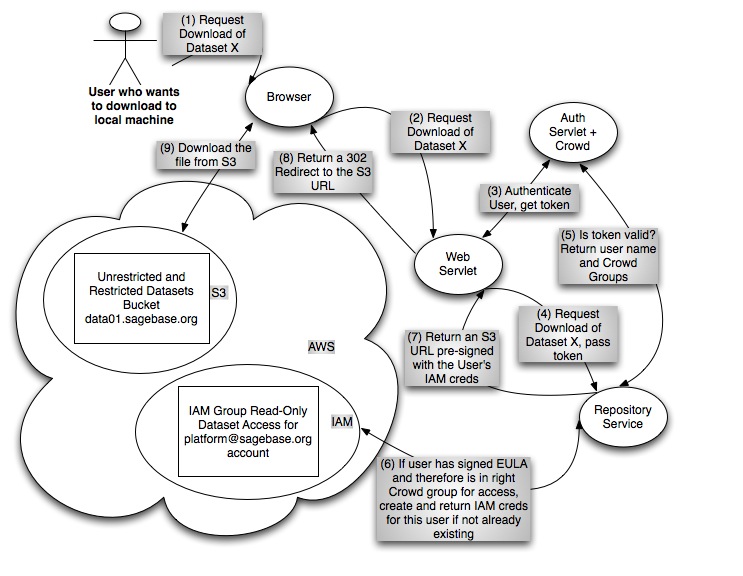

Download Use Case

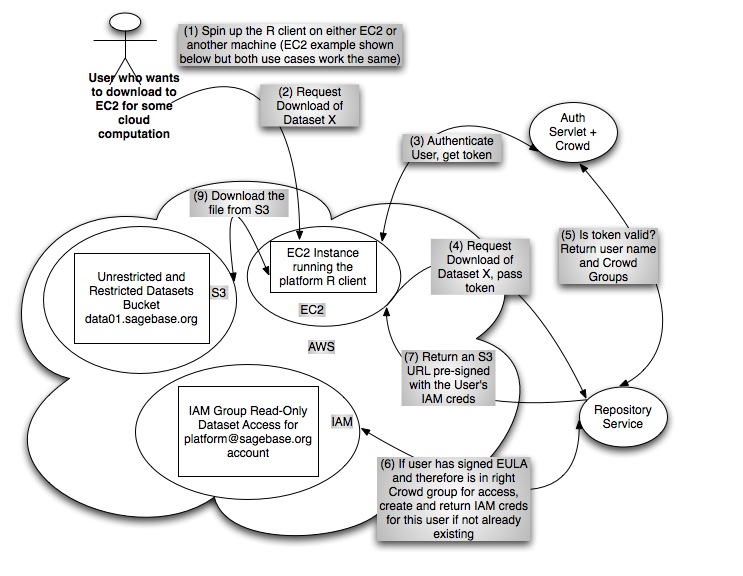

EC2 Cloud Compute Use Case

Elastic MapReduce Use Case

Assumptions

Where

Assume that the initial cloud we target is AWS, but that we plan to support additional clouds in the future.

...

- Best Effort Server Log Delivery:

- The server access logging feature is designed for best effort. You can expect that most requests against a bucket that is properly configured for logging will result in a delivered log record, and that most log records will be delivered within a few hours of the time that they were recorded.

- However, the server logging feature is offered on a best-effort basis. The completeness and timeliness of server logging is not guaranteed. The log record for a particular request might be delivered long after the request was actually processed, or it might not be delivered at all. The purpose of server logs is to give the bucket owner an idea of the nature of traffic against his or her bucket. It is not meant to be a complete accounting of all requests.

- Usage Report Consistency (http://docs.amazonwebservices.com/AmazonS3/2006-03-01/dev/index.html?ServerLogs.html): It follows from the best-effort nature of the server logging feature that the usage reports available at the AWS portal might include usage that does not correspond to any request in a delivered server log.

- I have it on good authority that the S3 logs are accurate, but delivery can be delayed now and then.

- Log format details

- AWS Credentials Primer


- Custom Access Log Information: "You can include custom information to be stored in the access log record for a request by adding a custom query-string parameter to the URL for the request. Amazon S3 will ignore query-string parameters that begin with "x-", but will include those parameters in the access log record for the request, as part of the Request-URI field of the log record. For example, a GET request for "s3.amazonaws.com/mybucket/photos/2006/08/puppy.jpg?x-user=johndoe" will work the same as the same request for "s3.amazonaws.com/mybucket/photos/2006/08/puppy.jpg", except that the "x-user=johndoe" string will be included in the Request-URI field for the associated log record. This functionality is available in the REST interface only."
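This naming convention suggests a way to attribute downloads in the logs even without per-user credentials: append an `x-`-prefixed parameter to each vended URL. A minimal sketch follows. The helper name is hypothetical, and the `x-user` parameter name is taken from the quoted example. The sketch also rests on an assumption that should be verified: under Signature Version 2, such parameters are not part of the signed string, so they can be appended after signing. Under Signature Version 4, every query parameter is signed, so the tag would have to be added before signing.

```python
from urllib.parse import urlencode, urlsplit, urlunsplit


def tag_url_for_log(url, user_id):
    """Append an x-user query parameter to an S3 URL.

    Per the S3 documentation quoted above, S3 ignores query parameters that
    start with "x-" when serving the request, but records them in the
    Request-URI field of the access log.
    """
    parts = urlsplit(url)
    tag = urlencode({"x-user": user_id})
    query = parts.query + "&" + tag if parts.query else tag
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query,
                       parts.fragment))
```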

Open Questions:

- Can we use the canonical user id to know who the user is if they have previously given us their AWS account id? No, but we can ask them to provide their canonical id to us.

- If we stick our own query params on the S3 URL, will they show up in the S3 log? Yes, as long as their names begin with "x-"; see the custom access log information above.

Options to Restrict Access to S3

S3 Pre-Signed URLs for Private Content

"Query String Request Authentication Alternative: You can authenticate certain types of requests by passing the required information as query-string parameters instead of using the Authorization HTTP header. This is useful for enabling direct third-party browser access to your private Amazon S3 data, without proxying the request. The idea is to construct a "pre-signed" request and encode it as a URL that an end-user's browser can retrieve. Additionally, you can limit a pre-signed request by specifying an expiration time."

Scenario:

- See above details about the general S3 scenario.

- This would not be compatible with Elastic MapReduce. Users would have to download files to an EC2 host and then store them in their own bucket in S3.

- The platform grants access to the data by creating pre-signed S3 URLs to Sage's private S3 files. These URLs are created on demand and have a short expiry time.

- Users will not need AWS accounts.

- The platform has a custom solution for collecting payment from users to pay for outbound bandwidth when downloading outside the cloud.

Tech Details:

- Repository Service: the service has an API that vends pre-signed S3 URLs for data layer files. Users are authenticated and authorized by the service prior to the service returning a download URL for the layer. The default URL expiry time will be one minute.

- Web Client: When the user clicks the download button, the web servlet sends a request to the repository service, which constructs and returns a pre-signed S3 URL with an expiry time of one minute. The web servlet returns this URL to the browser as the Location value of a 302 redirect, and the browser begins downloading from S3 immediately.

- R Client: When the user issues the download command at the R prompt, the R client sends a request to the repository service, which constructs and returns a pre-signed S3 URL with an expiry time of one minute. The R client uses the returned URL to begin downloading the content from S3 immediately.
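The web-client flow (authorize, vend a short-lived URL, answer with a 302) can be sketched as a tiny Python WSGI application. This is not the actual servlet. The in-memory `AUTHORIZED` set is a hypothetical stand-in for the Crowd group check, and `vend_presigned_url` is a hypothetical placeholder for the repository service call. The user name is assumed to arrive in `REMOTE_USER` from an upstream authentication layer.

```python
from urllib.parse import parse_qs

# Hypothetical stand-in for the Crowd group check: (user, layer) pairs.
AUTHORIZED = {("alice@example.org", "human_liver_cohort")}


def vend_presigned_url(layer):
    # Hypothetical placeholder for the repository service call, which would
    # return a freshly signed URL with a one-minute expiry.
    return ("https://s3.amazonaws.com/example-bucket/%s"
            "?Expires=0&Signature=placeholder" % layer)


def download_app(environ, start_response):
    """WSGI app: authorize the request, then redirect the browser to S3."""
    user = environ.get("REMOTE_USER")
    query = parse_qs(environ.get("QUERY_STRING", ""))
    layer = query.get("layer", [None])[0]
    if user is None:
        start_response("401 Unauthorized", [("Content-Type", "text/plain")])
        return [b"login required\n"]
    if (user, layer) not in AUTHORIZED:
        start_response("403 Forbidden", [("Content-Type", "text/plain")])
        return [b"not authorized for this data layer\n"]
    # 302 with a Location header: the browser downloads straight from S3.
    start_response("302 Found", [("Location", vend_presigned_url(layer)),
                                 ("Content-Length", "0")])
    return [b""]
```

Answering with a redirect keeps the web tier out of the data path. The browser follows the Location header and streams the file from S3 directly, so no proxy fleet is needed.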

Pros:

- S3 takes care of serving the files (once access is granted) so we don't have to run a proxy fleet.

- Simple! These can be used for both the download use case and the cloud computation use cases.

- Scalable

- We control the duration of the expiry window.

Cons:

- Potential for URL reuse: until a URL expires, others can use that same URL to download files.

- For example, suppose someone requests a download URL from the repository service (remember that the repository service confirms that the user is authenticated and authorized before handing out that URL) and then emails the URL to his team. His whole team could download the data layer, as long as they all start their downloads within that one-minute window.

- Of course, they could also download the data locally and then let their whole team have access to it.

- This isn't much of a concern for unrestricted data, because it's actually easier to just make a new account on the system with a new email address.

- Potential for user confusion: if a user gets her download URL and somehow does not use it right away, she'll need to reload the web page or re-run the R prompt command to get a fresh URL.

- This isn't much of a concern if we ensure that the workflow of the clients (web or R) is to use the URL upon receipt.

- Tracking downloads for charge-backs: We know when we vend a URL but it may not be possible to know whether that URL was actually used (no fee) or used more than once (multiple fees).
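One way to approach the charge-back question is to reconcile what was vended against what the access logs show. The sketch below assumes that the repository service records the `Signature` value of every URL it vends, together with the user it was vended to. Signatures are usually distinct, because they change with the key, the credentials and the expiry time. The function and its data shapes are hypothetical. Because log delivery is best effort, a count of zero means "not seen in the delivered logs", not "never used".

```python
from collections import Counter
from urllib.parse import parse_qs, urlsplit


def reconcile(vended, request_uris):
    """Count logged GETs per vended pre-signed URL.

    vended: {signature: user} for every URL the service handed out, with the
        signature stored in decoded (plain base64) form.
    request_uris: Request-URI fields from S3 access log records, e.g.
        "GET /bucket/key?AWSAccessKeyId=...&Signature=... HTTP/1.1".
    Returns {signature: (user, hits)}.
    """
    hits = Counter()
    for request_uri in request_uris:
        parts = request_uri.split(" ")
        if len(parts) != 3 or parts[0] != "GET":
            continue  # e.g. "-" or a non-GET request
        query = parse_qs(urlsplit(parts[1]).query)
        signature = query.get("Signature", [None])[0]
        if signature in vended:
            hits[signature] += 1
    # hits == 0: vended but not seen in delivered logs; hits > 1: reused
    # (or fetched in several ranged GETs).
    return {sig: (user, hits[sig]) for sig, user in vended.items()}
```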

Open Questions:

- Does this work with the new support for partial downloads of gigantic files? Currently assuming yes, and that the repository service would need to give out the URL several times over the course of the download (re-requesting the URL for each download chunk).

- Does this work with torrent-style access? Currently assuming no.

- Can we limit S3 access to HTTPS only? Currently assuming yes.
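If the partial-download assumption holds, the client side could look roughly like the sketch below. It splits the file into HTTP `Range` requests and fetches a fresh URL for each one. Under Signature Version 2, the `Range` header is not part of the signed string, so a pre-signed GET URL should accept it. `get_fresh_url` is a hypothetical callable that asks the repository service for a new pre-signed URL. The total size is assumed to be known in advance, for example from the layer's metadata.

```python
import urllib.request


def byte_ranges(total_size, chunk_size):
    """Yield HTTP Range header values covering total_size bytes in order."""
    if total_size <= 0 or chunk_size <= 0:
        raise ValueError("sizes must be positive")
    for start in range(0, total_size, chunk_size):
        end = min(start + chunk_size, total_size) - 1  # Range ends are inclusive
        yield "bytes=%d-%d" % (start, end)


def download_in_chunks(get_fresh_url, total_size, chunk_size, out):
    """Download a file chunk by chunk, re-requesting the URL for every chunk."""
    for byte_range in byte_ranges(total_size, chunk_size):
        # A new URL per chunk keeps each request inside its expiry window.
        request = urllib.request.Request(get_fresh_url(),
                                         headers={"Range": byte_range})
        with urllib.request.urlopen(request) as response:
            out.write(response.read())
```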

Resources:

- http://docs.amazonwebservices.com/AmazonS3/latest/dev/index.html?S3_QSAuth.html

...


- http://docs.amazonwebservices.com/AmazonS3/latest/dev/index.html?RESTAuthentication.html

- http://docs.amazonwebservices.com/AmazonS3/latest/dev/index.html?RESTAuthentication.html#RESTAuthenticationQueryStringAuth

S3 Bucket Policies

This is the newer mechanism from AWS for access control. We can add AWS accounts and/or IP address masks and other conditions to the policy.
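As an illustration, a read-only bucket policy of the kind described here might be generated as shown below. The account ID and CIDR are placeholders, and the helper is hypothetical. It is also an assumption to check whether Elastic MapReduce jobs need `s3:ListBucket`, which the sketch includes.

```python
import json


def read_only_bucket_policy(bucket, account_ids, source_cidr=None):
    """Build a bucket policy granting read-only access to the given AWS accounts."""
    principal = {"AWS": ["arn:aws:iam::%s:root" % a for a in account_ids]}
    statements = [
        {   # Read any object in the dataset bucket.
            "Sid": "ReadDatasetObjects",
            "Effect": "Allow",
            "Principal": principal,
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::%s/*" % bucket,
        },
        {   # List the bucket's keys (bucket-level resource, no "/*").
            "Sid": "ListDatasetBucket",
            "Effect": "Allow",
            "Principal": principal,
            "Action": "s3:ListBucket",
            "Resource": "arn:aws:s3:::%s" % bucket,
        },
    ]
    if source_cidr is not None:
        # Optional IP address mask condition on every statement.
        for statement in statements:
            statement["Condition"] = {"IpAddress": {"aws:SourceIp": source_cidr}}
    return json.dumps({"Version": "2012-10-17", "Statement": statements}, indent=2)
```

The resulting JSON would be attached to the bucket through the S3 console or the PutBucketPolicy API.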

...

- The repository service grants access by adding the user to the correct Crowd and IAM groups for the desired dataset. The Crowd groups are "the truth" and the IAM groups are a mirror of that. At access grant time, we also have to determine and store the mapping between the IAM id and the canonical user id. The S3 team has a fix for this on their roadmap, but in the interim we have to make a request for a particular known URL as that new user, then retrieve the canonical id from the S3 log and store it in our system.

- Log data

- For IAM user nicole.deflaux, my identity is recorded in the log as arn:aws:iam::325565585839:user/nicole.deflaux

- Note that IAM usernames can be email addresses. This lets us easily reconcile them with the email addresses registered with the platform, because we will use the email address each user gave us when we create their IAM user.

- Here is what a full log entry looks like:

```
d9df08ac799f2859d42a588b415111314cf66d0ffd072195f33b921db966b440 data01.sagebase.org [18/Feb/2011:19:32:56 +0000] 140.107.149.246 arn:aws:iam::325565585839:user/nicole.deflaux C6736AEED375F69E REST.GET.OBJECT human_liver_cohort/readme.txt "GET /data01.sagebase.org/human_liver_cohort/readme.txt?AWSAccessKeyId=AKIAJBHAI75QI6ANOM4Q&Expires=1298057875&Signature=XAEaaPtHUZPBEtH5SaWYPMUptw4%3D&x-amz-security-token=AQIGQXBwVGtupqEus842na80zMbEFbfpPhOsIic7z1ghm0Umjd8kybj4eaOtBCKlwVHMXi2SuasIKxwYljjDA95O%2BfZb5uF7ku4crE6OObz8d/ev7ArPime2G/a5nXRq56Jx2hAt8NDDbhnE8JqyOnKn%2BN308wx2Ud3Q2R3rSqK6t%2Bq/l0UAkhBFNM1gvjR%2BoPGYBV9Jspwfp8ww8CuZVH1Y2P2iid6ZS93K02sbGvQnhU7eCGhorhMI5kxOqy7bTbzvl2HML7zQphXRIa1wqrRSD/sBLfpK5x6A%2BcQnLrgO6FtWJMDo5rTmgEPo6esNIivWnaiI6BvPddLlBMZVtmcx39/cOBbrfK3v0vHmYb3oftseacjvBD/wfyigB5wbSgRUYNhbUu1V HTTP/1.1" 200 - 6300 6300 45 45 "https://s3-console-us-standard.console.aws.amazon.com/GetResource/Console.html?&lpuid=AIDAJDFZWQHFC725MCNDW&lpfn=nicole.deflaux&lpgn=325565585839&lpas=ACTIVE&lpiamu=t&lpak=AKIAJBHAI75QI6ANOM4Q&lpst=AQIGQXBwVGtupqEus842na80zMbEFbfpPhOsIic7z1ghm0Umjd8kybj4eaOtBCKlwVHMXi2SuasIKxwYljjDA95O%2BfZb5uF7ku4crE6OObz8d%2Fev7ArPime2G%2Fa5nXRq56Jx2hAt8NDDbhnE8JqyOnKn%2BN308wx2Ud3Q2R3rSqK6t%2Bq%2Fl0UAkhBFNM1gvjR%2BoPGYBV9Jspwfp8ww8CuZVH1Y2P2iid6ZS93K02sbGvQnhU7eCGhorhMI5kxOqy7bTbzvl2HML7zQphXRIa1wqrRSD%2FsBLfpK5x6A%2BcQnLrgO6FtWJMDo5rTmgEPo6esNIivWnaiI6BvPddLlBMZVtmcx39%2FcOBbrfK3v0vHmYb3oftseacjvBD%2FwfyigB5wbSgRUYNhbUu1V&lpts=1298051257585" "Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_6; en-US) AppleWebKit/534.13 (KHTML, like Gecko) Chrome/9.0.597.102 Safari/534.13" -
```
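To turn records like this into the "who downloaded what" report that the design relies on, a small parser over the space-separated fields is enough. The sketch below assumes the field order of the entry above: bucket owner, bucket, time, remote IP, requester, request ID, operation, key, Request-URI, status, error code, bytes sent, object size, total time, turn-around time, referrer, user agent. The function name is hypothetical, and it only reports requesters that are IAM users.

```python
import re
from collections import defaultdict

# Leading fields of an S3 server access log record, in the order shown above.
LOG_RECORD = re.compile(
    r'(?P<bucket_owner>\S+) (?P<bucket>\S+) \[(?P<time>[^\]]+)\] '
    r'(?P<remote_ip>\S+) (?P<requester>\S+) (?P<request_id>\S+) '
    r'(?P<operation>\S+) (?P<key>\S+) "(?P<request_uri>[^"]*)" '
    r'(?P<status>\S+) (?P<error_code>\S+) (?P<bytes_sent>\S+) '
    r'(?P<object_size>\S+) (?P<total_time>\S+) (?P<turn_around_time>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def downloads_by_user(lines):
    """Map IAM user name -> set of object keys it fetched successfully."""
    downloads = defaultdict(set)
    for line in lines:
        record = LOG_RECORD.match(line)
        if record is None or record["operation"] != "REST.GET.OBJECT":
            continue
        if not record["status"].startswith("2"):  # 200, or 206 for ranged GETs
            continue
        requester = record["requester"]
        # IAM users are logged as arn:aws:iam::<account>:user/<name>;
        # anonymous requests ("-") and other principals are skipped here.
        if ":user/" in requester:
            downloads[requester.split(":user/", 1)[1]].add(record["key"])
    return dict(downloads)
```

Since log delivery is best effort, a report built this way is a close approximation rather than a complete accounting.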

- For IAM user

- The repository service vends credentials for the users' use.

- Users will need to sign those S3 URLs with their AWS credentials using the usual toolkit provided by Amazon.

- One example tool provided by Amazon is s3curl

- I'm sure we can get the R client to do the signing too. We can essentially hide the fact that users have their own credentials for this, since the R client can communicate with the repository service and cache credentials in memory.

- We may be able to find a JavaScript library to do the signing as well for Web client use cases. If not, we could proxy download requests. That solution is described in its own section below.

...

The Pacific Northwest Gigapop is the point of presence for the Internet2/Abilene network in the Pacific Northwest. The PNWGP is connected to the Abilene backbone via a 10 GbE link. In turn, the Abilene Seattle node is connected via OC-192 links to both Sunnyvale, California and Denver, Colorado.

PNWGP offers two types of Internet2/Abilene interconnects: Internet2/Abilene transit services and Internet2/Abilene peering at the Pacific Wave International Peering Exchange. See Participant Services for more information.

...