...

Prior to switching to a VPC, each EC2 and database instance in the Synapse stack was issued a public IP address and was visible to the public internet. While each machine was still protected by a firewall (one or more Security Groups), SSH keys, and passwords, it was theoretically possible for hackers to probe the publicly visible machines for weaknesses. By deploying machines into a VPC, it is possible to completely hide the EC2 and database instances from the public internet.

...

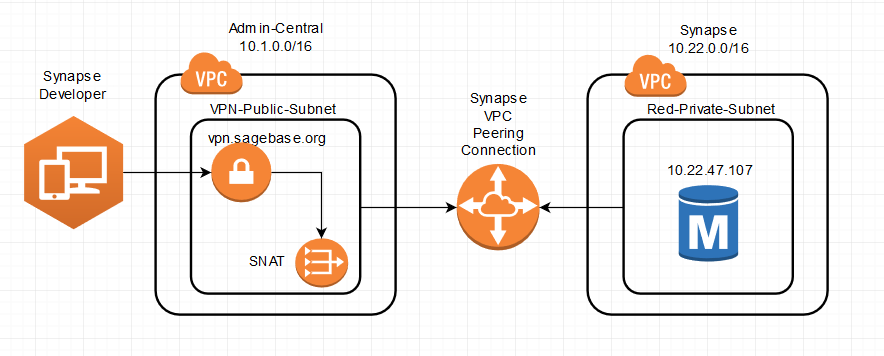

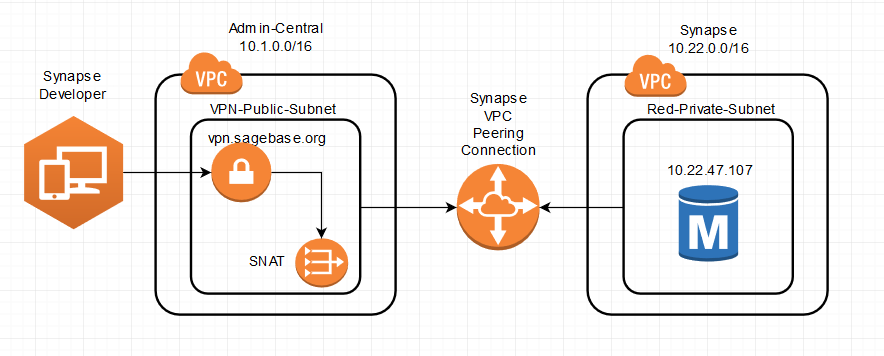

At a high level, a VPC is a named container for a virtual network. For example, the Synapse production VPC was assigned a CIDR of 10.20.0.0/16. This means the Synapse network contains 65,536 IP addresses between 10.20.0.0 and 10.20.255.255. The VPC is then further divided into subnets, which can be declared as either Private or Public. Instances contained in Public subnets can be assigned public IP addresses and therefore can be seen on the public internet. Conversely, instances deployed in private subnets do not have public IP addresses and cannot be seen by the public internet. Instances in private subnets are only visible to machines deployed within the containing VPC. We will cover how internal developers can access machines in private subnets in a later section.


Figure 1. Synapse VPC
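The CIDR arithmetic described above can be checked with Python's standard `ipaddress` module. This is a minimal sketch for illustration only; `describe` is a hypothetical helper and not part of the Synapse stack builder.

```python
import ipaddress
from typing import Tuple

def describe(cidr: str) -> Tuple[str, str, int]:
    """Return (first address, last address, total addresses) for a CIDR block."""
    network = ipaddress.ip_network(cidr)
    return str(network.network_address), str(network.broadcast_address), network.num_addresses

# The production VPC CIDR from the text.
vpc = ipaddress.ip_network("10.20.0.0/16")
print(describe("10.20.0.0/16"))  # ('10.20.0.0', '10.20.255.255', 65536)

# Every subnet must be carved out of the VPC CIDR.
print(ipaddress.ip_network("10.20.50.0/24").subnet_of(vpc))  # True
```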

The Synapse VPC is divided into six public and twenty-four private subnets that span six Availability Zones (AZs): us-east-1a, us-east-1b, us-east-1c, us-east-1d, us-east-1e, and us-east-1f (see Figure 1). Deploying all instances (EC2s and databases) across six zones ensures redundancy should an outage occur in a single zone (such as the event in April 2011) and broadens the availability of instance types. For details on each subnet, see Table 1.

| Subnet Name | Type | CIDR | First | Last | Total |

|---|

| PublicUsEast1a | Public | 10. |

22202222154096| 2048 |

| PublicUsEast1b | Public | 10. |

221620221622314096RedPrivateUsEast1a223222322239RedPrivateUsEast1b224022402247BluePrivateUsEast1a2248222255BluePrivateUsEast1b225622562263GreenPrivateUsEast1a| RedPrivateUsEast1a | Private | 10. |

226421226422712048GreenPrivateUsEast1b| RedPrivateUsEast1b | Private | 10. |

227221227222792048 | ...

| RedPrivateUsEast1c | Private | 10. |

...

...

...

Given that subnet address cannot overlap and must be allocated from a fix range of IP addresses (defined by the VPC), it would be awkward to dynamical allocate new subnets for each new stack. Instead, we decided to create fixed/permanent subnets to deploy new stacks into each week, thus returning to the blue green naming scheme. Since we occasionally, need to have three concurrently production-capable stacks we included red subnets. While we will continue to give each new stack a numeric designation, we will now also assign a color to each new stack. For example, stack 226 will be deployed to Red, 227 to Blue, 228 to Green, 229 to Red etc..

Public subnets

Any machine that must be publicly visible must be deployed to a public subnet. Since it is not possible to isolate public subnets from each other, there was little value in creating a public subnet for each color. One public subnet per availability zone is the minimums number possible. Each public subnet will contain the Elastic Beanstalk loadbalancers for each environment (portal, repo, works) of each stack. There is also a NAT Gateway deployed to each public subnet (need one NAT per AZ). We will cover the details of the NAT Gateways in the next section.

Each subnet has a Route Table that answers the question of how network traffic flows out of the subnet. Table 2 shows the route table used by both public subnets. Note: The most selective route will always be used.

...

Table 2. Public subnet route table entries

Each loadbalancer deployed in the public subnets is protected by the Security Groups that blocks access to all ports except 80 (HTTP) and 443 (HTTPS). See Table 3 for the details of the loadbalancer security groups.

...

Table 3. Security Groups for Elastic Beanstalk Loadbalancers deployed to public subnets

Private Subnets

As stated above, any machine deployed to a private subnet is not visible from outside the VPC. All Synapse EC2 and MySQL database instances are deployed within private subnets. More specifically, when a stack will be deployed to the permanent private subnets matching its configured color. For example, if stack 226 is configured to be Red, the EC2s and databases for that stack will be deployed to both of the Red private subnets. The private subnets for each color are assigned CIDRs such that all of the address within both private subnets fall within a CIDR for each color (see Table 4). This means the CIDR of each color can be used to isolate each stack. For example, instances in red subnets cannot see instances in blue subnets, or vise versa. Table 5 shows an example of the Security group applied to the EC2 instances within the red subnet using the color group CIDR. Table 6 shows an example of the Security Group applied to the MySQL databases deployed in the red private subnets.

...

Table 4. CIDR for each Color Group

...

Table 5. Security Group applied to EC2 instances in Red Private Subnets

...

Table 6. Security Group applied to MySQL RDS instances in Red Private Subnets

Just like with public subnets, each private subnet has a Route Table that describes how network traffic flows out of the subnet. Table 7 shows an example of the Route Table on the RedPrivateUsEast1a subnet.

...

Table 7. The Route Table on RedPrivateUsEast1a subnet

Internet Access from Within a Private Subnet

All of the EC2 instances deployed as part of Synapse need to make calls to AWS, Google, Jira, etc.. However, each instances is deployed in a private subnet without a public IP address so how does the response to and outside request make it back to the private machine? The answer is the Network Address Translator (NAT) Gateway. Figure 1 shows that each public subnet has a NAT Gateway, and Table 7 shows each private subnets has a route to 0.0.0.0/0 that points to the NAT Gateway within the same AZ. Each NAT has a public IP address and acts a proxy for outgoing requests from instances in the private subnets. When NAT receives an outgoing request from a private instance, it will replace the response address of the request with its own public address before forwarding the request. That way, the response goes back to the NAT, which then forwards the response to the original caller within the private subnet. Note: The NAT will not respond to requests that originate from the outside world so it cannot be used by gain access to the private machines.

While setting up the Synapse VPC it was our plan to utilizes Network Access Control Lists (Network ACLs) to further isolate each private subnet. In fact, we originally setup Network ACL similar to the security groups in Tables 3, 5, and 6. With the Network ACLs in place the EC2 instances were unable to communicate with the outside world (ping amazon.com failed). The following document outlines the important differences between Security Groups and Network ACLs: Comparison of Security Groups and Network ACLs. The explanation of the observed communication failures is summarized in Table 8.. In short, if a private subnet needs outgoing access on to 0.0.0.0/0, then you must also allow incoming access to 0.0.0.0/0 in the Network ACL.

...

Table 8. Important difference between Security Groups and Network ACLs

VPN Access to Private Subnets

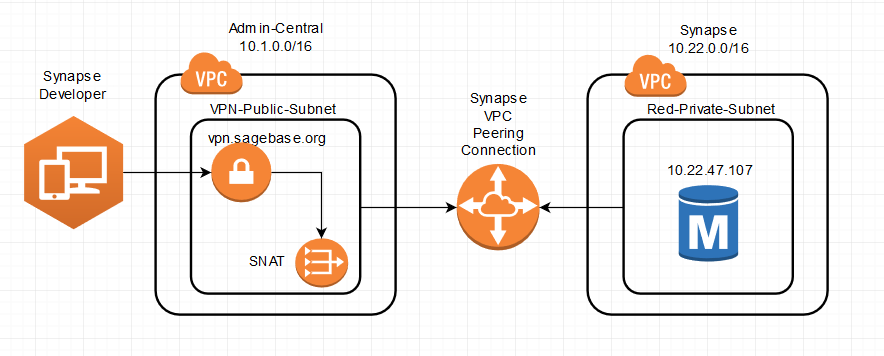

Earlier we stated that instances deployed to private subnets are only visible to machines within the same VPC. This is a true statement. So how does a developer connect to an instances deployed in a private subnet? The short answer: the developer must tunnel into the target VPC. Assume a developer needs to connect to the MySQL database at 10.22.47.107 as shown in Figure 2.

Image Removed

Image Removed

Figure 2. VPN tunnel to private subnet of a VPC

When a developer makes a VPN connection to vpn.sagebase.org, the VPN client will add a route for 10.22.0.0/16 on the developer's machine. This route will direct all network traffic with a destination within 10.22.0.0/16 to Sage-Admin-Central firewall. Once connected to the VPN, an attempt to connect to 10.22.47.107 will proceed as follows:

...

| 24 | 10.20.50.0 | 10.20.50.255 | 256 |

| RedPrivateUsEast1d | Private | 10.20.51.0/24 | 10.20.51.0 | 10.20.51.255 | 256 |

| RedPrivateUsEast1e | Private | 10.20.52.0/24 | 10.20.52.0 | 10.20.52.255 | 256 |

| RedPrivateUsEast1f | Private | 10.20.53.0/24 | 10.20.53.0 | 10.20.53.255 | 256 |

| BluePrivateUsEast1a | Private | 10.20.56.0/24 | 10.20.56.0 | 10.20.56.255 | 256 |

| BluePrivateUsEast1b | Private | 10.20.57.0/24 | 10.20.57.0 | 10.20.57.255 | 256 |

| BluePrivateUsEast1c | Private | 10.20.58.0/24 | 10.20.58.0 | 10.20.58.255 | 256 |

| BluePrivateUsEast1d | Private | 10.20.59.0/24 | 10.20.59.0 | 10.20.59.255 | 256 |

| BluePrivateUsEast1e | Private | 10.20.60.0/24 | 10.20.60.0 | 10.20.60.255 | 256 |

| BluePrivateUsEast1f | Private | 10.20.61.0/24 | 10.20.61.0 | 10.20.61.255 | 256 |

| GreenPrivateUsEast1a | Private | 10.20.64.0/24 | 10.20.64.0 | 10.20.64.255 | 256 |

| GreenPrivateUsEast1b | Private | 10.20.65.0/24 | 10.20.65.0 | 10.20.65.255 | 256 |

| GreenPrivateUsEast1c | Private | 10.20.66.0/24 | 10.20.66.0 | 10.20.66.255 | 256 |

| GreenPrivateUsEast1d | Private | 10.20.67.0/24 | 10.20.67.0 | 10.20.67.255 | 256 |

| GreenPrivateUsEast1e | Private | 10.20.68.0/24 | 10.20.68.0 | 10.20.68.255 | 256 |

| GreenPrivateUsEast1f | Private | 10.20.69.0/24 | 10.20.69.0 | 10.20.69.255 | 256 |

| OrangePrivateUsEast1a | Private | 10.20.72.0/24 | 10.20.72.0 | 10.20.72.255 | 256 |

| OrangePrivateUsEast1b | Private | 10.20.73.0/24 | 10.20.73.0 | 10.20.73.255 | 256 |

| OrangePrivateUsEast1c | Private | 10.20.74.0/24 | 10.20.74.0 | 10.20.74.255 | 256 |

| OrangePrivateUsEast1d | Private | 10.20.75.0/24 | 10.20.75.0 | 10.20.75.255 | 256 |

| OrangePrivateUsEast1e | Private | 10.20.76.0/24 | 10.20.76.0 | 10.20.76.255 | 256 |

| OrangePrivateUsEast1f | Private | 10.20.77.0/24 | 10.20.77.0 | 10.20.77.255 | 256 |

Table 1. Synapse VPC 10.20.0.0/16 subnets

Given that subnet addresses cannot overlap and must be allocated from a fixed range of IP addresses (defined by the VPC), it would be awkward to dynamically allocate new subnets for each new stack. Instead, we decided to create fixed/permanent subnets to deploy new stacks into each week, thus returning to the blue-green naming scheme. Since we occasionally need to have three production-capable stacks running at a time, we included Red subnets. We will continue to give each new stack a numeric designation, but we will also assign a color to each new stack. Colors are assigned in a round-robin manner. For example, stack 226 will be deployed to Red, 227 to Blue, 228 to Green, 229 to Red, etc. The Orange subnets are reserved for shared resources such as the ID generator database.
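The round-robin rotation can be sketched as a modulo over the three stack colors, anchored on the example above (stack 226 is Red). This is a hypothetical helper for illustration; the stack builder may simply read the color from the stack's configuration. Orange is deliberately excluded because it is reserved for shared resources.

```python
# Hypothetical helper: stack colors rotate Red -> Blue -> Green.
COLORS = ("Red", "Blue", "Green")
ANCHOR_STACK = 226  # from the text: stack 226 is deployed to Red

def color_for_stack(stack_number: int) -> str:
    """Return the subnet color for a numbered stack using round-robin assignment."""
    # Python's % always returns a non-negative result here, so older stacks work too.
    return COLORS[(stack_number - ANCHOR_STACK) % len(COLORS)]

for n in range(226, 230):
    print(n, color_for_stack(n))
# 226 Red
# 227 Blue
# 228 Green
# 229 Red
```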

Public subnets

Any machine that must be publicly visible must be deployed to a public subnet. Since it is not possible to isolate public subnets from each other, there was little value in creating a public subnet for each color. Instead, one public subnet per availability zone was created. Each public subnet will contain the Elastic Beanstalk loadbalancers for each environment (portal, repo, works) of each stack. There is also a NAT Gateway deployed to each public subnet (one NAT Gateway is needed per AZ). We will cover the details of the NAT Gateways in a later section.

Each subnet has a Route Table that determines how network traffic flows out of the subnet. Table 2 shows the route table used by the public subnets. Note: the most specific route (the one with the longest matching prefix) is always used.

| Destination | Target | Description |
|---|---|---|
| 10.20.0.0/16 | local | The default entry: traffic to any address within this VPC (10.20.0.0/16) stays within this VPC (local) |
| 10.1.0.0/16 | VPN Peering Connection | If the destination IP address is in the VPN VPC (10.1.0.0/16), use the VPC peering connection that connects the two VPCs |
| 0.0.0.0/0 | Internet Gateway | If the destination is any other IP address (0.0.0.0/0), use the VPC's Internet Gateway |

Table 2. Public subnet route table entries
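The "most specific route wins" rule is a longest-prefix match. A minimal sketch, assuming the route table is modeled as a simple list of (CIDR, target) pairs taken from Table 2; `route_target` is a hypothetical helper, not an AWS API.

```python
import ipaddress

# Route entries from Table 2, as (destination CIDR, target) pairs.
PUBLIC_ROUTES = [
    ("10.20.0.0/16", "local"),
    ("10.1.0.0/16", "VPN Peering Connection"),
    ("0.0.0.0/0", "Internet Gateway"),
]

def route_target(destination, routes=PUBLIC_ROUTES):
    """Return the target of the most specific (longest-prefix) route containing destination."""
    address = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr).prefixlen, target)
        for cidr, target in routes
        if address in ipaddress.ip_network(cidr)
    ]
    if not matches:
        raise LookupError(f"no route to {destination}")
    # A /16 beats /0, so VPC-internal and VPN traffic never goes to the Internet Gateway.
    return max(matches, key=lambda match: match[0])[1]

for dest in ("10.20.50.12", "10.1.33.107", "8.8.8.8"):
    print(dest, "->", route_target(dest))
# 10.20.50.12 -> local
# 10.1.33.107 -> VPN Peering Connection
# 8.8.8.8 -> Internet Gateway
```

Swapping the 0.0.0.0/0 target for a NAT Gateway gives the private subnet behavior described later, without changing the lookup logic.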

Each loadbalancer deployed in the public subnets is protected by a firewall called a Security Group that blocks access to all ports except 80 (HTTP) and 443 (HTTPS). See Table 3 for the details of the loadbalancer security groups.

| Type | CIDR | Port Range | Description |
|---|---|---|---|
| HTTP | 0.0.0.0/0 | 80 | Allow all inbound traffic on port 80 (HTTP) |
| HTTPS | 0.0.0.0/0 | 443 | Allow all inbound traffic on port 443 (HTTPS) |

Table 3. Security Groups for Elastic Beanstalk Loadbalancers deployed to public subnets

Private Subnets

As stated above, any machine deployed to a private subnet is not visible from outside the VPC. The EC2 and database instances of a stack are deployed to the private subnets of a single color. For example, if stack 226 is configured to be Red, the EC2s and databases for that stack will be deployed to the Red private subnets, such as RedPrivateUsEast1a and RedPrivateUsEast1b. The private subnets for each color are assigned CIDRs such that all of the addresses within them fall within a single CIDR (see Table 4). This means the CIDR of each color can be used to isolate each stack. For example, instances in Red subnets cannot see instances in Blue subnets, or vice versa. Table 5 shows an example of the Security Group applied to the EC2 instances within the Red subnets using the color group CIDR. Table 6 shows an example of the Security Group applied to the MySQL databases deployed in the Red private subnets.

| Color Group | CIDR | First | Last | Total |
|---|---|---|---|---|
| Red | 10.20.48.0/21 | 10.20.48.0 | 10.20.55.255 | 2048 |
| Blue | 10.20.56.0/21 | 10.20.56.0 | 10.20.63.255 | 2048 |
| Green | 10.20.64.0/21 | 10.20.64.0 | 10.20.71.255 | 2048 |

Table 4. CIDR for each Color Group
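Because color isolation relies on every private subnet of a color falling inside that color's CIDR, and outside every other color's CIDR, the layout can be checked mechanically. A minimal sketch, assuming the subnet lists are copied from Table 1; `color_group_problems` is a hypothetical helper.

```python
import ipaddress

# Color group CIDRs from Table 4.
COLOR_GROUPS = {
    "Red": ipaddress.ip_network("10.20.48.0/21"),
    "Blue": ipaddress.ip_network("10.20.56.0/21"),
    "Green": ipaddress.ip_network("10.20.64.0/21"),
}

# Private subnets copied from Table 1.
SUBNETS = {
    "Red": [f"10.20.{n}.0/24" for n in range(50, 54)],    # RedPrivateUsEast1c..1f
    "Blue": [f"10.20.{n}.0/24" for n in range(56, 62)],   # BluePrivateUsEast1a..1f
    "Green": [f"10.20.{n}.0/24" for n in range(64, 70)],  # GreenPrivateUsEast1a..1f
}

def color_group_problems(groups, subnets):
    """Return human-readable problems; an empty list means the layout isolates each color."""
    problems = []
    for color, cidrs in subnets.items():
        for cidr in cidrs:
            net = ipaddress.ip_network(cidr)
            if not net.subnet_of(groups[color]):
                problems.append(f"{cidr} is outside the {color} group {groups[color]}")
            for other, group in groups.items():
                if other != color and net.overlaps(group):
                    problems.append(f"{cidr} overlaps the {other} group {group}")
    return problems

for color, group in COLOR_GROUPS.items():
    print(color, group, group.network_address, group.broadcast_address, group.num_addresses)
print(color_group_problems(COLOR_GROUPS, SUBNETS))  # []
```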

| Type | CIDR/SG | Port Range | Description |
|---|---|---|---|
| HTTP | 10.20.48.0/21 | 80 | Allows machines within any Red private subnet to connect with HTTP |
| SSH | 10.1.0.0/16 | 22 | Allows traffic from the Sage VPN (10.1.0.0/16) to connect with SSH |
| HTTP | Loadbalancer Security Group | 80 | Allows the loadbalancers from this stack to connect with HTTP |

Table 5. Security Group applied to EC2 instances in Red Private Subnets

| Type | CIDR | Port Range | Description |
|---|---|---|---|
| MySQL | 10.20.48.0/21 | 3306 | Allows machines within any Red private subnet to connect on port 3306 |
| MySQL | 10.1.0.0/16 | 3306 | Allows traffic from the Sage VPN (10.1.0.0/16) to connect on port 3306 |

Table 6. Security Group applied to MySQL RDS instances in Red Private Subnets
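Security Groups contain only allow rules: a connection is accepted if any rule matches and is implicitly denied otherwise. A minimal sketch of evaluating the Table 6 rules, showing how the color CIDR keeps Blue instances away from Red databases. `is_allowed` is a hypothetical helper, and rules that reference another security group (such as the loadbalancer row in Table 5) are not modeled.

```python
import ipaddress

# Inbound rules from Table 6, as (source CIDR, port) pairs.
RED_DB_RULES = [
    ("10.20.48.0/21", 3306),  # any Red private subnet
    ("10.1.0.0/16", 3306),    # the Sage VPN
]

def is_allowed(source_ip, port, rules=RED_DB_RULES):
    """Allow if any rule matches both the source address and the port; deny otherwise."""
    source = ipaddress.ip_address(source_ip)
    return any(source in ipaddress.ip_network(cidr) and port == rule_port
               for cidr, rule_port in rules)

print(is_allowed("10.20.50.17", 3306))  # True: a Red instance
print(is_allowed("10.20.57.4", 3306))   # False: a Blue instance
print(is_allowed("10.1.33.107", 3306))  # True: traffic from the VPN
```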

Just like with public subnets, each private subnet has a Route Table that describes how network traffic flows out of the subnet. Table 7 shows an example of the Route Table on the RedPrivateUsEast1a subnet.

| Destination | Target | Description |
|---|---|---|
| 10.20.0.0/16 | local | The default entry: traffic to any address within this VPC (10.20.0.0/16) stays within this VPC (local) |
| 10.1.0.0/16 | VPN Peering Connection | If the destination IP address is in the VPN VPC (10.1.0.0/16), use the VPC peering connection that connects the two VPCs |
| 0.0.0.0/0 | NAT Gateway us-east-1a | If the destination is any other IP address (0.0.0.0/0), use the NAT Gateway that is within the same availability zone |

Table 7. The Route Table on RedPrivateUsEast1a subnet

Internet Access from Within a Private Subnet

All of the EC2 instances of Synapse need to make calls to AWS, Google, Jira, etc. However, each instance is deployed in a private subnet without a public IP address, so how does the response to an outside request make it back to the private machine? The answer is the Network Address Translation (NAT) Gateway. Figure 1 shows that each public subnet has a NAT Gateway, and Table 7 shows that each private subnet has a route to 0.0.0.0/0 that points to the NAT Gateway within the same AZ. Each NAT Gateway has a public IP address and acts as a proxy for outgoing requests from instances in the private subnets. When the NAT Gateway receives an outgoing request from a private instance, it replaces the request's source address with its own public address before forwarding the request. As a result, the response goes back to the NAT Gateway, which then forwards the response to the original caller within the private subnet. Note: the NAT Gateway will not accept connections that originate from the outside world, so it cannot be used to gain access to the private machines.
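The translation described above can be illustrated with a toy source NAT that rewrites outbound packets, maps replies back to the private caller, and drops unsolicited inbound traffic. This is a simplified sketch, not how the AWS NAT Gateway is implemented: it keys mappings only on the public port (a real NAT tracks the full connection), and the addresses 203.0.113.10 and 198.51.100.7 are hypothetical documentation-range examples.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int

class ToyNat:
    """Toy source NAT: rewrites outbound sources, maps replies back, drops unsolicited inbound."""

    def __init__(self, public_ip: str, first_port: int = 1024):
        self.public_ip = public_ip
        self._next_port = itertools.count(first_port)
        self._private_to_public: Dict[Tuple[str, int], int] = {}
        self._public_to_private: Dict[int, Tuple[str, int]] = {}

    def outbound(self, packet: Packet) -> Packet:
        """Replace the private source address with the NAT's public address and a mapped port."""
        key = (packet.src_ip, packet.src_port)
        if key not in self._private_to_public:
            port = next(self._next_port)
            self._private_to_public[key] = port
            self._public_to_private[port] = key
        return Packet(self.public_ip, self._private_to_public[key], packet.dst_ip, packet.dst_port)

    def inbound(self, packet: Packet) -> Optional[Packet]:
        """Translate a reply back to the private caller; return None for unsolicited traffic."""
        key = self._public_to_private.get(packet.dst_port)
        if packet.dst_ip != self.public_ip or key is None:
            return None
        return Packet(packet.src_ip, packet.src_port, key[0], key[1])

nat = ToyNat("203.0.113.10")
request = nat.outbound(Packet("10.20.50.17", 40000, "198.51.100.7", 443))
reply = nat.inbound(Packet("198.51.100.7", 443, request.src_ip, request.src_port))
probe = nat.inbound(Packet("198.51.100.99", 5555, "203.0.113.10", 2000))
print(request)  # Packet(src_ip='203.0.113.10', src_port=1024, dst_ip='198.51.100.7', dst_port=443)
print(reply)    # Packet(src_ip='198.51.100.7', src_port=443, dst_ip='10.20.50.17', dst_port=40000)
print(probe)    # None
```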

While setting up the Synapse VPC, we planned to use Network Access Control Lists (Network ACLs) to further isolate each private subnet. In fact, we originally set up Network ACLs similar to the security groups in Tables 3, 5, and 6. With the Network ACLs in place, the EC2 instances were unable to communicate with the outside world (for example, 'ping amazon.com' would hang). The following document outlines important differences between Security Groups and Network ACLs: Comparison of Security Groups and Network ACLs. The explanation of the observed communication failures is summarized in Table 8. In short, if a private subnet needs outgoing access to 0.0.0.0/0, then you must also allow incoming traffic from 0.0.0.0/0 in the Network ACL.

| Security Group | Network ACL |
|---|---|
| Is stateful: return traffic is automatically allowed, regardless of any rules | Is stateless: return traffic must be explicitly allowed by rules |

Table 8. Important difference between Security Groups and Network ACLs
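The difference in Table 8 explains the failure described above: a reply from an outside server arrives from an arbitrary internet address on an ephemeral port, which rules modeled on the security groups never match. A minimal sketch, assuming simplified allow-only inbound rules (real Network ACLs also have numbered rule ordering, deny rules, and outbound rules); the class names, example addresses, and the 1024-65535 ephemeral range are illustrative.

```python
import ipaddress

def rules_allow(rules, src_ip, dst_port):
    """True if any (cidr, low_port, high_port) allow rule matches the packet."""
    src = ipaddress.ip_address(src_ip)
    return any(src in ipaddress.ip_network(cidr) and low <= dst_port <= high
               for cidr, low, high in rules)

class StatelessAcl:
    """Network ACL style: every inbound packet, replies included, is checked against the rules."""
    def __init__(self, inbound_rules):
        self.inbound_rules = inbound_rules
    def send(self, src_ip, src_port, dst_ip, dst_port):
        pass  # no connection tracking
    def receive(self, src_ip, src_port, dst_ip, dst_port):
        return rules_allow(self.inbound_rules, src_ip, dst_port)

class StatefulGroup(StatelessAcl):
    """Security Group style: replies to tracked outbound connections are allowed automatically."""
    def __init__(self, inbound_rules):
        super().__init__(inbound_rules)
        self.connections = set()
    def send(self, src_ip, src_port, dst_ip, dst_port):
        self.connections.add((src_ip, src_port, dst_ip, dst_port))
    def receive(self, src_ip, src_port, dst_ip, dst_port):
        if (dst_ip, dst_port, src_ip, src_port) in self.connections:
            return True  # return traffic of a connection this side opened
        return super().receive(src_ip, src_port, dst_ip, dst_port)

# Inbound rules modeled on Table 5: HTTP from the Red CIDR, SSH from the VPN.
RED_RULES = [("10.20.48.0/21", 80, 80), ("10.1.0.0/16", 22, 22)]

def reply_gets_through(firewall):
    # A Red instance calls an outside HTTPS server; the reply comes back to an ephemeral port.
    firewall.send("10.20.50.17", 40000, "198.51.100.7", 443)
    return firewall.receive("198.51.100.7", 443, "10.20.50.17", 40000)

print(reply_gets_through(StatefulGroup(RED_RULES)))  # True
print(reply_gets_through(StatelessAcl(RED_RULES)))   # False
print(reply_gets_through(StatelessAcl(RED_RULES + [("0.0.0.0/0", 1024, 65535)])))  # True
```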

VPN Access to Private Subnets

Earlier we stated that instances deployed to private subnets are only visible to machines within the same VPC. This is a true statement. So how does a developer connect to an instances deployed in a private subnet? The short answer: the developer must tunnel into the target VPC. Figure 2. shows an example of an of how a developer connects to the MySQL database at 10.20.47.107 contained within a private subnet.

Image Added

Image Added

Figure 2. VPN tunnel to private subnet of a VPC

When a developer makes a VPN connection to vpn.sagebase.org, the VPN client adds a route for 10.20.0.0/16 on the developer's machine. This route directs all network traffic with a destination within 10.20.0.0/16 to the Sage-Admin-Central firewall. Once connected to the VPN, an attempt to connect to 10.20.47.107 proceeds as follows:

- The call to 10.20.47.107 is directed to the Sage firewall.

- If the VPN user belongs to the 'Synapse Developers' group, the request is forwarded to the SNAT.

- The SNAT (a source NAT) replaces the caller's real address with its own address, 10.1.33.107, and forwards the request.

- A route table entry directs all traffic with a destination of 10.20.0.0/16 to the Synapse VPC peering connection.

- The peering connection allows all traffic between the two VPCs (10.1.0.0/16 and 10.20.0.0/16), so the request can tunnel into the Synapse VPC.

- Once in the Synapse VPC, the requestor's address is 10.1.33.107. Since the database security group allows 10.1.0.0/16 to connect on port 3306, the connection is allowed if the correct database username and password are provided.
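The steps above can be traced as a sequence of checks. This is a minimal sketch for illustration: `trace_connection` is hypothetical, the group check stands in for whatever the Sage firewall actually does, and it assumes the target database uses a security group like the one in Table 6.

```python
import ipaddress

VPN_CLIENT_ROUTE = ipaddress.ip_network("10.20.0.0/16")      # route added by the VPN client
SNAT_ADDRESS = "10.1.33.107"                                 # address used by the Sage SNAT
DB_RULES = [("10.20.48.0/21", 3306), ("10.1.0.0/16", 3306)]  # modeled on Table 6

def trace_connection(dest_ip, dest_port, user_groups):
    """Return the hops a developer's connection takes, stopping at the first rejection."""
    if ipaddress.ip_address(dest_ip) not in VPN_CLIENT_ROUTE:
        return ["not routed through the VPN"]
    hops = ["Sage firewall"]
    if "Synapse Developers" not in user_groups:
        return hops + ["rejected: not in 'Synapse Developers'"]
    hops.append(f"SNAT (source rewritten to {SNAT_ADDRESS})")
    hops.append("VPC peering connection")
    # The security group only ever sees the SNAT address, not the developer's real address.
    source = ipaddress.ip_address(SNAT_ADDRESS)
    allowed = any(source in ipaddress.ip_network(cidr) and dest_port == port
                  for cidr, port in DB_RULES)
    hops.append("database security group: " + ("allowed" if allowed else "denied"))
    return hops

for hop in trace_connection("10.20.47.107", 3306, {"Synapse Developers"}):
    print(hop)
```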

CloudFormation

The entire Synapse stack is built using the Java code from the Synapse-Stack-Builder project. In the past, the stack builder created AWS resources by directly calling AWS services via the AWS Java client. One of the stated goals of switching Synapse to a VPC was to move the stack-building process to CloudFormation templates. While CloudFormation templates can be awkward to create, using them to build and manage a stack has several advantages:

- A template can be read to discover exactly how a stack was created.

- The creation or update of a stack acts as a quasi-transaction, so either all of the changes are applied successfully or they are rolled back completely.

- Cleaning up a stack is now as simple as deleting the CloudFormation stack. Previously, deleting the resources of a stack was a manual process.

The resources of the Synapse stack are managed as five separate CloudFormation stacks, shown in Table 9. All template JSON files are created by merging the actual templates with input parameters using Apache Velocity.
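The merge step can be pictured as parameter substitution followed by a JSON validity check. The stack builder does this with Apache Velocity in Java; the sketch below swaps in Python's standard `string.Template` as a stand-in, and the template text, parameter names, and `render` helper are all hypothetical.

```python
import json
from string import Template

# Hypothetical template fragment; the real templates are Velocity templates in Synapse-Stack-Builder.
TEMPLATE = Template("""{
  "Description": "Shared resources for $stack-$instance",
  "Parameters": {
    "SubnetColor": {"Type": "String", "Default": "$color"}
  }
}""")

def render(stack: str, instance: int, color: str) -> dict:
    """Merge input parameters into the template and parse the result as JSON."""
    text = TEMPLATE.substitute(stack=stack, instance=instance, color=color)  # KeyError if a parameter is missing
    return json.loads(text)  # fail fast if the merge produced invalid JSON

print(render("prod", 226, "Red")["Description"])  # Shared resources for prod-226
```

Parsing the merged output before handing it to CloudFormation surfaces templating mistakes locally instead of as a failed stack creation.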

| Stack Name | Frequency | Description | Example |
|---|---|---|---|
| synapse-prod-vpc | One time | A one-time stack to create the Synapse VPC and associated subnets, route tables, network ACLs, security groups, and VPC peering. | synapse-prod-vpc.json |
| prod-<instance>-shared-resources | Once per week | Created once each week (e.g., prod-226-shared-resources) to build all of the shared resources of a given stack, including MySQL databases and the Elastic Beanstalk Application. | prod-226-shared-resources.json |
| repo-prod-<instance>-<number> | One per version per week | Created for each version of the repository-services deployed per week. For example, if we needed two separate instances of repository services for prod-226, the first would be repo-prod-226-0 and the second would be repo-prod-226-1. Creates the Elastic Beanstalk Environment and CNAME for the repository-services. | workers-prod-226-0.json |
| workers-prod-<instance>-<number> | One per version per week | Created for each version of the workers deployed per week. Similar to repo-prod. Creates the Elastic Beanstalk Environment and CNAME for the workers. | workers-prod-226-0.json |
| portal-prod-<instance>-<number> | One per version per week | Created for each version of the portal deployed per week. Similar to repo-prod. Creates the Elastic Beanstalk Environment and CNAME for the portal. | portal-prod-226-0.json |

Table 9. The cloudformation templates of Synapse
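The naming convention in Table 9 can be captured in a few lines. A minimal sketch with hypothetical helpers; the `versions` argument (how many versions of each environment are deployed that week) is an illustrative assumption, defaulting to one version per environment.

```python
ENVIRONMENTS = ("repo", "workers", "portal")

def shared_resources_stack(instance: int) -> str:
    return f"prod-{instance}-shared-resources"

def environment_stack(env: str, instance: int, number: int) -> str:
    """Name of the stack for one version of an environment, e.g. repo-prod-226-0."""
    if env not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {env}")
    return f"{env}-prod-{instance}-{number}"

def stacks_for_week(instance: int, versions: dict) -> list:
    """All weekly stack names; versions maps environment -> number of versions (default 1)."""
    names = [shared_resources_stack(instance)]
    for env in ENVIRONMENTS:
        names += [environment_stack(env, instance, n) for n in range(versions.get(env, 1))]
    return names

print(stacks_for_week(226, {"repo": 2}))
# ['prod-226-shared-resources', 'repo-prod-226-0', 'repo-prod-226-1', 'workers-prod-226-0', 'portal-prod-226-0']
```

The one-time synapse-prod-vpc stack is intentionally left out, since it is not recreated each week.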